| Generations |

Pre-3D (-1995) Texture Mappers (1995-1999) Fixed T&L, Early Shaders (2000-2002) Shader Model 2.0/3.0 (2003-2007) Unified Shaders (DX10-11) Featureset determines where a card will go, not its year of introduction |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Unified Shader? GPGPU? |

Around 2004 time, when SM3.0 was new and cool, we were noticing from framerate profiling that some frames, roughly one or two a second, took far more rendering time than all others. This caused "micro-stutter", an uneven framerate. The shader profile showed that these frames were much more vertex shader intensive than the others and GPUs had much less vertex shader hardware than pixel shader hardware, because most frames needed about a four to one mix. As shader units were getting more and more complex, it made sense for each shader to be able to handle both vertex and pixel programs. ATI introduced this with the Radeon HD2xxx series, though due to other "features" on the GPU, they were generally slower than the previous generation X1xxx series. Nvidia jumped on board with the Geforce 8 series, with 500 MADD GFLOPS on the top end Geforce 8800 parts. The teraflop GPU was in sight. The first GPU with enough shaders running fast enough to be able to manage one trillion operations per second was the AMD Radeon HD4850, though the previous generation 3870 X2 had surpassed this, it was two GPUs on one card. Nvidia struggled during this generation, while the Geforce GTX285 did manage to hit one TFLOPS, it was expensive, noisy, power hungry and rare. | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| MADD? FMA? |

The most complex operation an FPU historically did was the "multiply-add" (MADD) or "fused-multiply-add" (FMA). It's doing a multiply and an add at the same time. The operation is effectively an accumulate on value a where a = a + (b x c) and the distinction is that intermediate value b x c in a MADD is rounded to final precision before it is added to a, while in an FMA, the intermediate is at a higher precision and not rounded before the add, only the final value is rounded. The real-world distinction is minor and usually ignorable but for FMA being performed in a single step, so typically twice as fast. AMD introduced FMA support in TeraScale 2 (Evergreen, 2009) and Nvidia in Fermi (2010). A common driver optimisation is to promote MADD operations to FMA. For consistency's sake, where we list "MADD GFLOPS" or "FMA GFLOPS", we may mean either, whichever one is fastest on the given hardware. An FMA, despite being treated as one operation, is actually two and therefore if a GPU can do one billion FMAs a second, its GFLOPS (giga-floating point operations per second, 1 GFLOPS is one billion ops per second) is 2. However, FMA performance does carry the implication of it being "two in one", so a GPU's throughput in other operations is half that. FMA is important, dot products and matrix multiplication intensively use FMA, as do convolutions, as in a convolutional neural network. FMA also allows more efficient calculations of square roots and divisions. So, if we work out the throughput of a GPU in purely number of operations it can do in any given second (let's have a hypothetical RTX 2070 running at 2.0 GHz)... CUDA cores = 2304 Clock = 2.0 (as this is in GHz, our result will be in billions of operations per second, GFLOPS) Nice and simply, we just multiply the number of cores by the clock. This assumes all cores do useful work in every clock tick for one second. This almost never really happens. 2304 x 2.0 = 4,608 GFLOPS If you just run a lot of parallel very tight loops of FP32 adds or multiplies in CUDA code, this is the number you'll measure. If you start running real code, where the compiler can throw FMA into the mix (and it will, this is a very low hanging fruit for compiler optimisation), you'll find your results climb above the theoretical maximum we calculated just now. If, however, your measurements are clever and count an FMA as one operation, they will not. | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

PNY GeForce 8800 GTS 512 MB - 2007 It's fitting that we lead this article with the GeForce 8800, which was the first DirectX 10 class GPU, the first use of unified shaders, the first implementation of Nvidia's new "Tesla" architecture, which was still called G80 back then. What we understand a modern GPU to be, this was the first. As a popular card, there were a lot of variants, and PNY's lot usually used its "XLR8" branding, but this one used Nvidia's reference branding. What G80 did was make very simple ALUs which could be double-pumped. The G80 architecture did SIMD over eight "stream processors" (later to be called CUDA cores) which were natively FP32. With 16 "streaming multiprocessors" (SMs), G80 had 128 stream processors. It clocked them to 1,200 "MHz-equivalent" in most G80s, but the rest of the GPU clocked at a more sensible 600 MHz. This double pumped ALU stack was using clock doubling, so it triggered on the rising and falling edges of the clock. The shader domain on G80/Tesla/Fermi ran the ALUs at the double-pumped "shader domain" clock, but the supporting structures, such as cache, registry, flow control, special functions, dispatch and retire only ran at the base clock. The original 8800 GTS had a 320 MB variant with 12 SMs and a 640 MB variant with 14 SMs. They used the G80 GPU on TSMC's 90 nm process. When 65 nm came along, Nvidia was soon transitioning over to it, and the G92 GPU was the result. It had the same 16x8 streaming processor configuration, but was a lot smaller, 324 mm^2 instead of G80's 480. This GTS 8800 512MB was a G92 and was configured almost identically to the GeForce 9800 GTX. In the 8800 GTS 512 MB, G92 was clocked higher than its G80 predecessor, with a shader domain of 1625 MHz and a GPU clock of 812.5 MHz. G92 was not a direct die shrink of G80, it was a little more capable in its CUDA configuration (Compute Capability 1.1, not 1.0), but the 55 nm G92b was a direct die shrink of G92. G92 improved the PureVideo IP block, meaning VC-1 and H.264 could be GPU assisted. G92 also had an alarming incompatibility with PCIe 1.0a motherboards, which led to the video card not initialising during boot. This caused a very high return rate, as Nvidia's backwards compatibility (something the PCIe specification demands) only went as far back as PCIe 1.1. Nvidia's spec for the memory was 820 MHz GDDR3, but most vendors used faster RAM. The 8800 and 9800 series were just before Nvidia started to lose the plot a little. Tesla's extreme shader clocks served it well for small pixel shader programs of the sort DX9 would use. G80 was up against ATI's RV670, which had 320 cores, but in a VLIW-5 design. While VLIW-5 was harder to get peak performance from, it used fewer transistors, less die area and so resulted in a smaller, cheaper GPU - It also used much less power. A product line as popular as the 8800 series was means it was influential. Games even as far as 2015, eight years later, would often say a 8800 GT or GTS was the minimum requirement. While ATI's RV670 in, say, Radeon HD 3870 (8800 GTS's most direct competitor) had a board power of just over 100 watts, 8800 GTS was rated at 150 watts. So far, so good. By the time reach 2009, two years later, we have Radeon HD 5870's Cypress GPU against Tesla's swansong, GT200B, we have ATI's 180 watt board power against Nvidia's 238 watt GeForce GTX 285. Ultimately it was not possible for Nvidia to keep throwing more and more power at the problem. Nvidia PureVideo (VDPAU), the SIP block doing video decode tasks, was second generation, which could handle H.264 up to 2K resolution, but had only partial VC-1 support. MPEG-2 and MPEG-4 SP/ASP were fully handled. Core: 16 ROPs, 32 TMUs, 650MHz (20.8 billion texels per second, 10.4 billion pixels per second) RAM: 256 bit GDDR3, 970MHz, 62,080MB/s Shader: 16x Unified shader 5.0 (16 SIMD of 8, 128 total) MADD GFLOPS: 416 Most recent driver is 342.01 from 2016, when G80/G9x class Tesla support was dropped. | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

MSI Radeon HD3450 (V118 R3450-TD256H Hewlett Packard OEM variant) - 2007 All I had for this was an Underwriters Laboratories certification number, E96016 which identified it as nothing beyond the basic PCB manufactured by Topsearch of Hong Kong and the model number, V118. That's not a lot to be going on. It came out of a HP Pavillion desktop, pretty typical consumer junk and certainly nothing intended for gaming, so it's not going to be anything spectacular (the size of the PCB says that too). All hardware tells a story, it's just a matter of listening. The silkscreening on the PCB tells us that it's got guidelines for quite a few different coolers, this PCB is meant for a family of video cards perhaps all based around the same GPU or similar GPUs. The VGA connector can be omitted easily and isn't part of the PCB - It's aimed at OEMs who know what they want and need no flexibility. It's a Radeon HD3450 with 256MB manufactured by MSI using a Topsearch manufactured PCB. It's amazing what the right search terms in Google can let you infer, isn't it? MSI's standard retail part comes with a large passive heatsink and S-video out, but OEMs can get whatever variant of a design they like if it'll seal a deal for thousands of units. These things, the V118 model, were retailing at about $20. Under the heatsink is a 600MHz RV620 (A near-direct die shrink to 55nm of the HD2400's 65nm RV610) GPU feeding 1000MHz GDDR2 memory, but the good ends there. The memory is 64 bit, giving a meagre 8GB/s bandwidth and the core has only four ROPs - It's a single quad. Unified shader 4.1 is present, 40 units, but they're pretty slow. Core: RV620 with 4 ROPs, 1 TMU per ROP, 600MHz (2.4 billion texels per second, 2.4 billion pixels per second) RAM: 64 bit DDR2, 1000MHz, 8000MB/s Shader: 1x Unified shader 4.1 MADD GFLOPS: 48 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

ATI Radeon HD 3450 256 MB (AMD B629 Dell OEM variant as 'W337G') - 2007 ATI's OEM parts were never easy to identify. This one carried its FCC identification as ADVANCED MICRO DEVICES MODEL: B629, dating it to after AMD's acquisition of ATI. The PCB carries a date code showing it was made in week 19, 2010. The rear connector carries a DMS-59 output and the standard-for-the-time S-Video output. Looking at the size and layout of the PCB shows us that it is clearly a derivative of the one above with many components sharing identical placement. Notably, this one has a half-height bracket for the Dell Optiplex SFF 360/380/755/760/780. The performance of the Radeon HD 3450's RV620 GPU was, to a word, lacking. It carried DDR2 memory as standard, running it at 500 MHz for just 8 GB/s memory bandwidth. The memory was provided by SK Hynix as four BGA packages, two on each side. At 600 MHz the GPU itself was never going to set any records, especially with just one R600-architecture shader package (40 individual shaders). Getting more performance out of this was an exercise in how highly the RAM would clock. Even with the down-clocked GPU, 8 GB/s plain was not enough. The GPU itself would normally hit 700-800 MHz (and in the HD 3470, the same GPU was running at 800 MHz on the same PCB, with the same cooler!) but this didn't help when the RAM was slow 64 bit DDR2. When it arrived here, in 2019, it had been sat in a corporate stock room for years and was completely unused, not a single grain of dust on the fan. On power on, as was common for GPUs of the day, the fan spins up to maximum before winding back. This is really quite noisy for such a small fan! No amount of persuasion or different systems got anything recognising it as a video card, or even that a video card was present. We'll talk about the GPU architecture on this one, since the actual card was not functional. R600 used a ring bus to connect the ROPs to the shader cores, a rather unusual architecture, and took a VLIW-5 instruction set later back-named TeraScale 1. This means each shader block of 40 was eight individual cores, which could handle a single VLIW-5 instruction to its five execution units. TeraScale 2 would increase this to sixteen cores (and 80 shaders per block). R600's ring bus also, as noted, decoupled the ROPs from the execution units, but the texture units were "off to one side" of the ring bus, so each group of execution units did not have its own samplers and combining samples with pixels to do MSAA meant first colour data exiting the shaders into the ring bus, then into the texture units, then back to the ring bus to head to the ROPs to be finally MSAA sampled. This was inefficient and, in the original R600, plain didn't work. Even in the revised silicon, which RV620 here is based on, it was best to avoid antialiasing. The RV620 also improved the UVD video block from "1.0" to "2.0", adding better video decoding to the GPU. Physically, the GPU was 67 mm^2 and contained 181 million features. At 67 mm^2, it barely cost anything to make. It would be AMD's smallest GPU until Cedar/RV810, three years later in 2010, at 59 mm^2. A drop in the price of bulk silicon from TSMC after 28 nm and the general increase in very low end GPU cost meant that no subsequent GPU has been this small, though Nvidia's 77 mm^2 GP108 came close. Powerful IGPs from AMD and Intel embedded in the CPUs have put paid to the very small die entry level GPU segment. Core: RV620 with 4 ROPs, 1 TMU per ROP, 600MHz (2.4 billion texels per second, 2.4 billion pixels per second) RAM: 64 bit DDR2, 1000MHz, 8000MB/s Shader: 1x Unified shader 4.1 MADD GFLOPS: 48 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

PowerColor Radeon HD 3850 AGP 512MB - 2007 If one were to chart all the GPUs made since the early DX7 era, he would notice a near-perfect rule applying to the industry. One architecture is used for two product lines. For example, the NV40 architecture was introduced with Geforce 6 and then refined and extended for Geforce 7. There's an entire list covering the whole history of the industry. Observe:

What's happening is that a new GPU architecture is made on an existing silicon process, one well characterised and understood. ATI's R600 was on the known 80nm half-node process, but TSMC quickly made 65 nm and 55 nm processes available. The lower end of ATI's Radeon HD 2000 series were all 65 nm, then the 3000 series was 55 nm. The die shrink gives better clocking, lower cost and better performance, even if all else is equal. It's also an opportunity for bugfixing and fitting in those last few features which weren't quite ready first time around. In ATI's case, the Radeon HD 2 and 3 series are the same GPUs. Here's a chart of what uses what:

Whew! ATI used the R600 architecture across three product lines, making it almost as venerable as the R300, which was used across three lines and in numerous mobile and IGPs. ATI used R300 so much because it was powerful and a very good baseline. So what of R600? To a word, it sucked. The top end 2900 XTX was beaten by the previous generation X1900 XT, let alone the X1950s and the top of the line X1950 XTX. It suffered a catastrophic performance penalty from enabling antialiasing and Geforce 7 could beat it pretty much across the board: Yet here was a product meant to be taking on Geforce 8! While this was released in 2008 (announced in January, but was in the channel by December the previous year), the PCIe version was 2007, so that is listed as the year here. ATI did, however, throw the price really low. You could pick them up for £110 when new and even as I wrote this entry (August 2009) they were still in stock for around £75. There'd not been a really decent mid-range card since the Geforce 6600GT (though the Geforce 7900GT was fairly mid-range, it was overpriced) and the ageing Geforce 7 series was still available, it was not at the DirectX 10 level. The ATI Radeon HD 3850 pretty much owned the mid-range of the market. Better still, it was available in this AGP version which wasn't a cut down or in any way crippled model as most AGP versions were. Indeed, Sapphire's AGP version was overclocked a little and both Sapphire and PowerColor's AGP cards had 512 MB of memory, up from the standard 256 MB. The PowerColor card used this very large heatsink but Sapphire used a slimline single-slot cooler. In early 2008, these were a damned good buy, especially for the ageing Athlon64 X2 (or Opteron) or older Pentium4 system which was still on AGP. It's also a worth a mention about the Unified Video Decoder here. ATI called it the "Xilleon video processor" (because everything ATI branded was "-eon"), which was based on a licensed Tensilica Xtensa LX. It didn't offer MPEG-2 at this stage (MPEG-2 was easily a CPU task by this time) or MPEG-4 SP/ASP, but instead the more complex H.264 and VC-1 decoding up to the requirement of BluRay and HD-DVD. This basic capability would be retained with minor enhancement up to and including the Radeon HD 5000 generation. Core: RV670 with 16 ROPs, 1 TMU per ROP, 670MHz (10.7 billion texels per second, 10.7 billion pixels per second) RAM: 256 bit GDDR3, 1660MHz, 53,100 MB/s Shader: 4x Unified shader 4.1 MADD GFLOPS: 429 Supplied by Doomlord |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

AMD Radeon HD 4870 - 2008 After losing their way somewhat with the Radeon HD 2xxx (R600) debacle, AMD were determined to set matters straight with the 3xxx and 4xxx series. The 3xxx were little more than bugfixed and refined 2xxx parts, comprising of RV620, 635 and 670 (though the RV630 was actually the Radeon HD 2600). ATI had postponed the R400/Loki project when it turned out to be far too ambitious and had simply bolted together two R360s to produce their R420, which powered the Radeon X800XT and most of the rest of that generation, sharing great commonality with the Radeon 9700 in which R300 had debuted. Indeed, a single quad of R300, evolved a little over the years, powers AMD's RS690 chipset onboard graphics as a version of RV370. By 2006, the R400 project still wasn't ready for release (ATI had been sidetracked in building chipsets, being bought by AMD) and R500, which R400's Loki project was now being labelled as, was still not ready. Instead, R520 was produced. It was quite innovative and the RV570, as the X1950, became one of the fastest things ever to go in an AGP slot. It also provided the XBox 360's graphics. It took until 2008 for what was the R400 to finally hit the streets as R600. All the hacks, tweaks and changes made to the chipset meant that it was barely working at all. Things as basic as antialiasing (which should have been handled by the ROPs) had to be done in shaders because the ROPs were rumouredly broken. This crippled the R600's shader throughput when antialiasing was in use and led to pathetically low benchmark scores. R700 corrected everything. Sporting unified shaders at 4.1 level (beyond DirectX 10) and eight hundred of them at that (these are raw ALUs, the RV770 contains ten shader pipelines, each with 16 cores, each core being 5 ALUs - It's more correct to say that RV770 contains 10 shaders, and 160 shader elements, what AMD call 'stream processors'), corrected the ring memory controller's latency issues and fixed the ROPs. After two generations of being uncompetitive, AMD were back in the ring with the RV710 (4450, 4470), RV730 (4650, 4670) and RV770 (4850, 4870). RV770's ROPs are again arranged in quads, each quad being a 4 pipeline design and having a 64 bit bus to the memory crossbar. Each pipeline has the equivalent of 2.5 texture mappers (can apply five textures in two passes, but only two in one pass) Worth comparing is a Radeon HD 2900XT: 742MHz core, 16 ROPs, 105.6GB/s of raw bandwidth, 320 shaders, but about a third of the performance of the 4870 - Even when shaders aren't being extensively utilized. Core: 16 ROPs, 40 TMUs, 750MHz (30.0 billion texels per second, 12.0 billion pixels per second) RAM: 256 bit GDDR4, 1800MHz, 115200MB/s (GDDR5 is dual ported, so is actually a form of QDR) Shader: 10x Unified shader 4.1 (80 units each) MADD GFLOPS: 1,260 It is apparently quite difficult to coax full performance out of the shaders, most synthetic benchmarks measure between 200 and 800 GFLOPS Thanks to Filoni for providing the part |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

Nvidia Quadro FX 380 256 MB - 2008 The Quadro FX 380 used Nvidia's G96 GPU, and ran it at 450 MHz. It had 256 MB of GDDR3 RAM, running at 700 MHz. It used the Tesla architecure, had 16 shaders, 8 TMUs and 8 ROPs. It was rated for a very low 34 watt TDP. This meant it compared against the embarrasingly slow GeForce 9400 GT:

The significantly more powerful 9400 GT was also about a quarter of the price. The G96 GPU had four execution cores, each with 8 CUDA cores, but the Quadro FX 380 had half the entire GPU disabled. It, to a word, stank. Did you get ECC memory? No. Was it a top quality PCB? No, it was just a regular PNY manufactured PCB. Some components were rotated, like the VRM inductor and the RAM chips, but this is hardly unique to a Quadro, and PNY used almost the exact same PCB design on its 9500 GT Core: 4 ROPs, 8 TMUs, 450MHz (3.6 billion texels per second, 1.8 billion pixels per second) RAM: 128 bit DDR3, 700MHz, 22,400MB/s Shader: 2x Unified shader 4.0 (16 units) MADD GFLOPS: 28.8 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

AMD Radeon HD 5450 512 MB - 2009 Here's a beauty, the slowest (and smallest, just 59 mm²) thing that could even be a Terascale2 GPU and still work. If you just wanted to add a DVI port to an older PC or a build without any onboard video, it was worth the £40 or so you'd pay. Like all AMD's Terascale 2, they could take a DVI-HDMI adapter and actually output HDMI signals, so the audio would work. It was up against Nvidia's entirely unattractive GeForce 210 and GT220, which it resoundly annihilated, but nobody was buying any of these for performance. Some of them were supplied with a low profile bracket, you could remove the VGA port (on the cable), and the rear backplane, and replace it with the low profile backplane. This XFX model was supplied with such a bracket. The 'Cedar' GPU was also featured in the FirePro 2250, FirePro 2460 MV, Radeon HD 6350, Radeon HD 7350, R5 210 and R5 220. It was pretty much awful for performance in all of these, but it was meant to be. Nobody bought one of these things expecting a powerful games machine. I dropped it in a secondary machine with a 2.5 GHz Athlon X2, and ran an old benchmark (Aquamark 3) on it. It scored roughly the same as a Radeon 9700 from 2003, despite the shader array being three times faster, the textured pixel rate is very similar, and the memory bandwidth much less. This particular unit uses RAM far below AMD's specification of 800 MHz, instead using common DDR3-1333 chips. In this case, Nanya elixir N2CB51H80AN-CG parts rated for 667 MHz operation, but it clocked them at 533 MHz (1067 MHz DDR). I got about 700 MHz out of them, 715 MHz was too far and my first attempts at 800 MHz (before I knew exactly which RAM parts were in use) were an instant display corruption, Windows 10 TDR loop and eventual crash. Being passive, the GPU couldn't go very far. I had it up to 670 MHz (11.2 GB/s) without any issues, but I doubt it'd make 700 MHz. It finally retired in 2023, after running in the server that powers hattix.co.uk, and it was part of the reason for the retirement. The server had become a CCTV DVR for its heaviest workload, and was running two Xeon E5-2640s, which it never got good use out of. The GPU was not capable of H.264 encoding in hardware, AMD did have encoding acceleration in OpenCL, but this GPU was far incapable of that. The Xeons had no hardware encoding, so had to run software encoding. The decoder would work in hardware, but recording the streams to disk meant re-encoding, and this caused a constant background CPU load on one core. This raised power use... Replacing the whole thing with a Core i5 3570K got Intel QuickSync hardware decoding and encoding. Back to the card, I'm sure XFX would say the RAM was below spec and even underclocked for power reasons, but DDR3 uses practically no power and the RAM chips aren't touching the heatsinks anyway. AMD's official (if confused) specs gave RAM on this card as "400 MHz DDR2 or 800 MHz DDR3". AMD also specifies the bandwidth for DDR3 as 12.8 GB/s, which is matched by 64 bit DDR3-1600. The 8 GB/s of this card is far below that spec. Even the memory's rated DDR3-1333 is still only 10.6 GB/s. Memory hijinks aside, all 5450s ran at 650 MHz, seemingly without exception. What rubs is that Cedar spends 292 million transistors on being around as fast as the 107 million in the R300. It does do more, has quadruple the RAM (if less than a third of the bandwidth), and has Shader Model 5.0, DirectX 11.2 compliance, and three to four times the raw shader throughput, but pixel throughput is around the same, and so is comparable performance. Sample Benchmark Results Unigine Valley (Basic, 1280x720): 226 Core: 4 ROPs, 4 TMUs, 650MHz (2.6 billion texels per second, 2.6 billion pixels per second) RAM: 64 bit DDR3, 533MHz, 8,528MB/s Shader: 1x Unified shader 5.0 (80 units) MADD GFLOPS: 104 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

AMD Radeon HD 5450 512 MB - 2009 Here's a quandry. This is the Dell-branded AMD V218. Install it and it identifies as Radeon HD 5450 and it even went into CrossfireX with the XFX card above. The XFX 5450 above uses a 1108 date code (week 08, 2011) and this one has a 1145 date code. They're both 215-0767003 part codes, corresponds to ATI Cedar. They both clock their GPUs at 650 MHz and both run 512 MB DDR3 SDRAM. Both identify as PCI device 1002-68F9. So, they're identical, right? Not so fast! The XFX runs 533 MHz DDR3, the Dell runs 800 MHz DDR3. A small change, of course, but important when the memory bus is so cramped to begin with. It's a lot more bandwidth, 12.8 GB/s vs 8.5 GB/s. The card also uses the same BIOS as the Dell Radeon HD 6350. We've mentioned how AMD's official spec is 512 MB, 64 bit DDR3 @ 800 MHz, but it's surprising how few cards actually ran this. The Dell one here is bang on, but so many other versions were out there. Asus made a 2 GB model which ran its RAM at 450 MHz, it'd be slower than this one despite four times the RAM. XFX made a "ONE HD 5450" model which ran 400 MHz RAM. There were 400, 450, 533, 650, 667 and 800 MHz variations. You wanted faster, not bigger, so this 512 MB version at 800 MHz was one of the fastest. AMD's high volume OEM parts retained a standard heatsink fitting for many generations. This was wholly and entirely compatible with the heatsink design used on the HD 3450 just above, despite actually coming with a much smaller heatsink (TDP here was 19 watts, a HD 3450 ran 25 watts). So, of course, I swapped 'em. This one spent its entire life running two 50" 720p-ish monitors mounted to a wall in an IT ops office, typically showing SolarWinds displays, which began in around 2012 and went on to 2021 when it was retired and, somewhat unnecessarily, added to the Dell Precision T5600 which was running this server, to partner the XFX Radeon HD 5450 which was already there. After a quick shakedown, it was showing minor graphical corruption, horizontal red lines, and crashed the system. This was likely static damage to the RAM chips, so it went in the Big Box of No Escape. Sample Benchmark Results Unigine Valley (Basic, 1280x720): 254 Core: 4 ROPs, 4 TMUs, 650MHz (2.6 billion texels per second, 2.6 billion pixels per second) RAM: 64 bit DDR3, 800 MHz, 12,800 MB/s Shader: 1x Unified shader 5.0 (80 units) MADD GFLOPS: 104 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

TBC |

AMD Radeon HD 5750 - 2009 AMD's Radeon HD 5700 series rapidly became the mid-range GPUs to rule them. Represented by the Juniper GPU, it sported a teraflops of shader power and over 70GB/s of raw bandwidth. The 5750 promised to be the king of overclockability, being the same Juniper GPU as the 5770, with one shader pipeline disabled and clocked 150 MHz lower, but supplied with an identical heatsink on largely identical PCBs. So it'd produce less power per clock and would overclock further, right? Wrong. AMD artificially limited overclocking on Juniper-PRO (as the 5750 was known) to 850 MHz and even then most cards just wouldn't reach it. 5770s would hit 900 MHz, sometimes even 1 GHz from a stock clock of 850, yet the very same GPUs on the 5750 would barely pass 800 from stock of 700. This one, for example, runs into trouble at 820 MHz. Why? AMD reduced the core voltage for 5750s. Less clock means less voltage required, meaning lower power use but also lower overclocking headroom. 5750s ran very cool, very reliable, but paid the price in their headroom. You could hack the BIOS to restore the voltage scaling, but I never did on either of my cards. Juniper was so successful that AMD rather cheekily renamed them from 5750 and 5770 to 6750 and 6770. No, really, just a pure rename. A slight firmware upgrade enabled BluRay 3D support and HDMI1.4, but any moron could flash a 5750 with a 6750 BIOS and enjoy the "upgrade". Unfortunately there was no way of unlocking the disabled 4 TMUs and shader pipeline on the 5750 to turn it into a 5770, it seems they were physically "fused" off. The Stream Processors were arranged very much like the previous generation, 80 stream processors per pipeline (or "compute engine"), ten pipelines (one disabled in the 5750). Each pipeline has 16 cores, and each "core" is 5 ALUs, so our 5750 has 144 VLIW-5 processor elements. With a slightly downgraded, but more efficient and slightly more highly clocked GPU and slightly more memory bandwidth, the 5750 was that touch faster than a 4850. In places it could trade blows with a 4870 (see above). The 5xxx series really was just a fairly minor update to the earlier GPUs. Video decoding was AMD's UVD2.2, which could handle MPEG-2, MPEG-4 SP, MPEG-4 ASP, and H.264 up to 2K resolution and two streams simultaneously. This card is the Powercolor version, and the PCB is quite flexible. It is able to be configured as R84FH (Radeon HD 5770), R84FM (This 5750) and R83FM (Radeon HD 5670) - Redwood shared the same pinout as Juniper, so was compatible with the same PCBs. It also could be configured with 512 MB or 1 GB video RAM. The 512 MB versions were a touch cheaper, but much less capable. The Powercolor one (red PCB) was bought to add to an XFX I already had, so run in CrossFire. In games which it worked on, CrossFire was extremely powerful, but subject to all the issues a multi-GPU setup had, mostly in frame pacing.

Core: 16 ROPs, 36 TMUs, 700MHz (25.2 billion texels per second, 11.2 billion pixels per second) RAM: 128 bit GDDR5, 1150MHz, 73,600MB/s (GDDR5 is dual ported, so is actually a form of QDR) Shader: 9x Unified shader 5.0 (80 units each) MADD GFLOPS: 1,008 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

AMD Radeon HD 5770 - 2009 The Radeon HD 5770 was about the champion of price effectiveness in 2009 on release, and for much of 2010. A cheaper video card was usually much slower, a faster one was much more expensive. The closely related 5750 was about 5-10% cheaper and 5-10% slower. AMD had planned a "Juniper-LE", to complete the "PRO" (5750) and "XT" (5770) line up, but the smaller, slower and much cheaper Redwood GPU overlapped, so the probable HD 5730 was either never released or very rare. A "Mobility Radeon HD 5730" was released, which was a Redwood equipped version of the Mobility 5770, which used GDDR3 memory instead of GDDR5. Redwood, in its full incarnation, was exactly half a Juniper. Observe:

AMD's VLIW-5 architecture clustered its stream processors in groups of five (this allows it to do a dot-product 3 in one cycle), there are 16 such groups in a "SIMD Engine" or shader pipeline. Juniper has ten such engines. Each engine has four texture mappers attached. Back to the 5770 at hand, when new it was about £130 (January 2011) and by far the apex of the price/performance curve, joined by its 5750 brother which was a tiny bit slower and a tiny bit cheaper. Video decoding was AMD's UVD2.2, which could handle MPEG-2, MPEG-4 SP, MPEG-4 ASP, and H.264 up to 2K resolution and two streams simultaneously. Core: 16 ROPs, 40 TMUs, 850MHz (34 billion texels per second, 13.6 billion pixels per second) RAM: 128 bit GDDR5, 1200MHz, 76,800MB/s (GDDR5 is dual ported, so is actually a form of QDR) Shader: 10x Unified shader 5.0 (10 VLIW-5 of 80, 800 total) MADD GFLOPS: 1,360 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

Asus EAH5770 Radeon HD 5770 CUCore - 2010 This mid-sized dual-slot design used a copper cored heatsink among its otherwise traditional radial aluminium extruded fins. The two photos here are one without the cooler and one with it, during the initial cleaning. I wasn't ever expecting to put this back into service, so I didn't really care much about the thermal interface and just dropped some unmelted phase change material back over the GPU with a razor blade. Measuring the cooler's effectiveness was to prove more complex than even that mess would make it! I can tell you the cooler this worked well, but measuring it in-situ was difficult. Let's talk about that. The AMD Evergreen driver you're most likely to use today, a late version of Catalyst 15, or an early Catalyst 16, had a very interesting bug in it. The GPU here is used mostly for its H.264 decoding ability, as the server also handles home DVR for security cameras (never farm off to a giant global megacorporation that which you can do at home) so needs to decode video streams. That, and this was the most powerful GPU I had sitting spare in the box of parts. So when the GPU is in video decoding mode, AMD applies a power profile called "UVD" after "Unified Video Decoder". This is a GPU clock of 400 MHz and RAM clock of 900 MT/s (450 MHz DDR). No matter what else you then do on the GPU, you will be in the UVD power profile! Bad when you're trying to measure its performance or maybe do some OpenCL code while the UVD is active! A fix which works on some driver versions (the permanent fix came after Evergreen went out of support) is to find HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Class\{4d36e968-e325-11ce-bfc1-08002be10318} in the registry, find the correct display adapter under that (it will usually be 0000, but may not be) and add a DWORD of "KMD_MaxUVDSessions" with a value of 20 hex or 32 dec. This seems to force the UVD manager into multisession mode, where clocks are managed differently. On driver version Crimson 16.1.1 Beta, this did not fix the issue. I initially figured my abuse of the thermal interface was the cause of a substantial problem: In Furmark, it hit 105C and plain stayed there, throttling back between 850 MHz and 600 MHz. I did a proper re-paste job on it to make sure that wasn't the problem. Indeed, it was not the problem. The problem was one most mid-range GPUs of that era had: They were small to medium dies (166 mm²) running at pretty high power, so were difficult to cool. This particular card had a TDP of 108 watts, but seemed to run at 125 watts under a very heavy load. They weren't power limited, they were "VDCC" limited, which was a limit on the current from the VRM. Under Furmark, it'd reach around 80% of maximum allowed... This implied the GPU could probably hit 150 watts if it were cooled well enough. So I fixed it with Bernoulli's principle. The card as supplied has a shroud around the fan and over the heatsink, removing this allows Bernoulli to work for us. When we blow air, we make a small pressure wave in front of us as that air moves other air out of its way, so also leaves a slight reduction in pressure behind it. This means we pull in a lot more air we haven't actually blown, if that air can be affected, but the shroud here stops that happening and hugely limits airflow. Removing it allows airflow to be increased by (sometimes) a factor of ten to fifteen. It's a huge effect. It brought steady-state Furmark down from 105C to 88C. This effect is also why a fan stood off from whatever it's blowing air at and (ideally) without a duct or frame will blow a lot more air than one in a duct or flush against the surface. Juniper was AMD's mainstream of this generation, with most video cards launching from October 2009 to June 2010. This hit retail in the first week of June 2010. In the UK, it would have been about £150, or £220 in January 2024 money, per the Bank of England Inflation Calculator

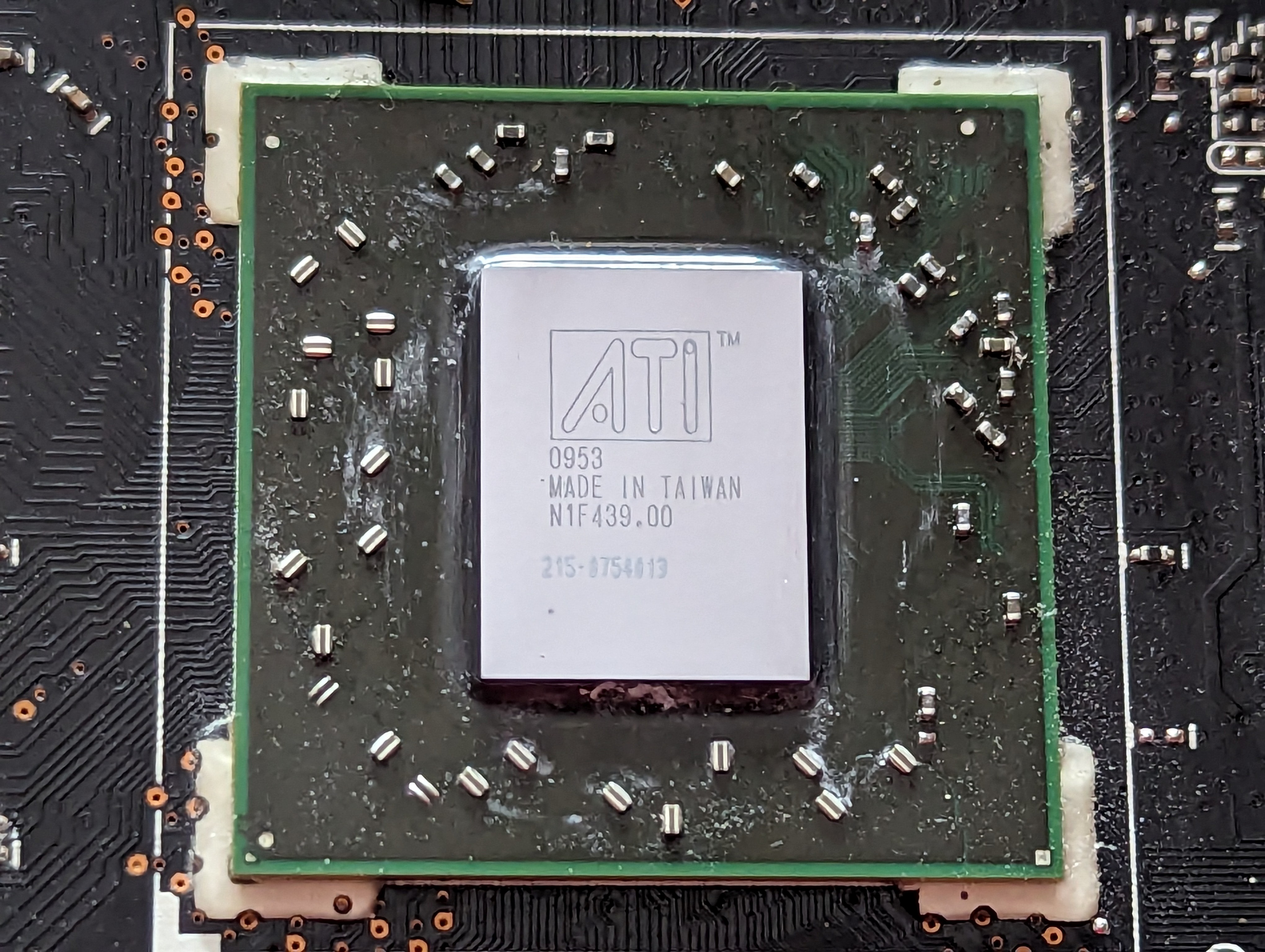

The "0953" date code shows this was made in week 53 of 2009, so probably December 30th or 31st. Now this was one step below the Radeon HD 5850, so was the upper-midrange product, roughly equivalent to the RX 7800XT or RTX 4070 Ti today. You won't find one of those at £220! As Moore's Law and Dennard Scaling died in around 2016-2019, the cost of silicon has risen enormously and prices have tripled and quadrupled to match. While AMD offered 512 MB and 1 GB VRAM options for the 5750, the 5770 was only ever officially available with 1 GB (though it could easily be designed with 512 MB). Asus used Samsung K4G10325FE-HC04 GDDR5 memory, and it's cool to decode Samsung model numbers here, so let's do that! The Hattix Samsung Memory Model Decoder The number refers to how far along the model number we are. 1 - Always K = "Samsung" 2 - 4 = "DRAM" 3 - G = "GDDR5". There's also N for graphics-intended DDR2, W for graphics-intended DDR3, and J for GDDR3 4+5 - 10 = "1G, 8K/32ms". Density and refresh. The RAM is 1 Gbit in size, and each 8K row needs refreshing every 32 ms 6+7 - 32 = "x32" DRAM organization, so this was 32 bits wide. Common widths for GDDR5 of this era were 16 and 32 8 - 5 = "16 banks" The number of banks. Other values were "4" for 8 banks, or "3" for 4 banks 9 - F = "POD_15" This is the interface, which is either POD or SSTL. POD_15 means we want 1.5V POD signalling 10 - E = "Revision", or revision 6. Samsung later expanded this as "generations" and a higher revision is not always better. Rev1 is "M" and usually qualification. Revision 2 is "A" and initial mass production. Revision 3, "B", is high performance bins. Later revisions than that usually focus on lower power or lower cost 11 - H = "170FBGA Halogen and Lead free" - the package type. GDDR5 of this generation was only available in this package 12 - C = "Commercial Normal" this is the operating conditions. This refers to temperature and power. Other options are "low" and "high", although Samsung offers segments which aren't commercial, such as radiation hardened and military, they're not usually on public spec sheets 13-14 - 04 = "0.40 ns" this is the cycle time, corresponding to a per-pin bitrate of 5.0 Gbps All this means we have four DRAMs, each 32 bits wide and 1 Gb in capacity, rated to a per-pin 5.0 Gbps bitrate. Compicating matters is that the DRAMs here are stacked, so there's another four on the back, analogous to having two DIMMs on one channel, only one can be used at any one time. So, if we ran this flat out, as seen, we have a 128 bit bus (32 x 4), each bit running at 5.0 Gbps, for a total bitrate of 640 Gbps or 80 GB/s. From our transfer rate of 1,200 MT/s (a 600 MHz clock) we calculate 76.8 GB/s, meaning this RAM is very slightly underclocked - It is rated for 1,250 MT/s. This can be explained in many ways, maybe Asus really did underclock the RAM, but more likely a 1,200 MT/s signal rate didn't neatly fit into the rating of any widely available GDDR5, and AMD/Asus wanted the headroom. Running RAM slightly slower than its maximum signalling rate means latencies can be adjusted to suit. I haven't pulled apart the BIOS to check latencies, but wouldn't be surprised to see the CAS latency set appropriately for running 1.25 GT/s memory at 1.20 GT/s. By modern standards, the Juniper-XT GPU is hopelessly outdated, even in OpenCL tasks. It has no FP64 support at all, and a peak FP32 GFLOPS of 1,360, which is usually measured to around 1,100 in benchmarks. For context, an RX 570 will usually get to around 5,200 GFLOPS, an RTX 2070 will top 9,000 and... well... even CPUs can keep up. The Xeon E5-2690 v4 (from 2015) it is being used with benchmarks to 710 GFLOPS FP32, and a completely modest Ryzen 5 5600X hits 906. This is using AIDA64's GPGPU benchmark for Single-Precision FLOPS. It isn't a rigorous test, but it is a comparable one. BIOS on these cards wasn't encrypted or signed at all, so you could load anything, including modified BIOS images. There was a simple checksum, which was there just to ensure proper programming of a new BIOS. From the factory, this had BIOS version 12.15 (with a compile date of 14th Jan 2010). It had the standard GPU and RAM clocks (850/1200), limits of 1200 MHz GPU and 1445 MT/s RAM (which no 5770 was ever going to hit), a max temperature of 109C and completely sensible performance profiles. Soft-modding was not possible on Juniper, so a 5750 could not have the additional two units enabled to turn it into a 5770. As this GPU is actually in use as of 2024, I'll get some benchmark results here at some point. That won't end embarrassingly. At least, with a Xeon E5-2490v4, we're definitely not going to be CPU limited! Core: 16 ROPs, 40 TMUs, 850MHz (34 billion texels per second, 13.6 billion pixels per second) RAM: 128 bit GDDR5, 1200MHz, 76,800MB/s Shader: 10x Unified shader 5.0 (10 VLIW-5, each VLIW-5 has 80 elements, 800 total) MADD GFLOPS: 1,360 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

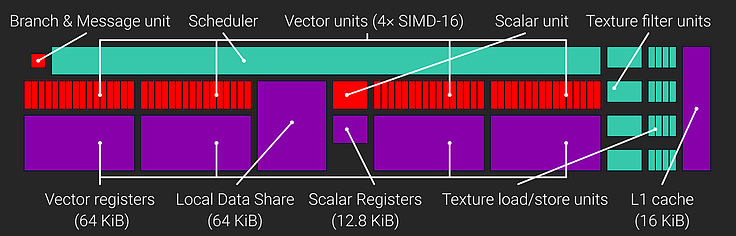

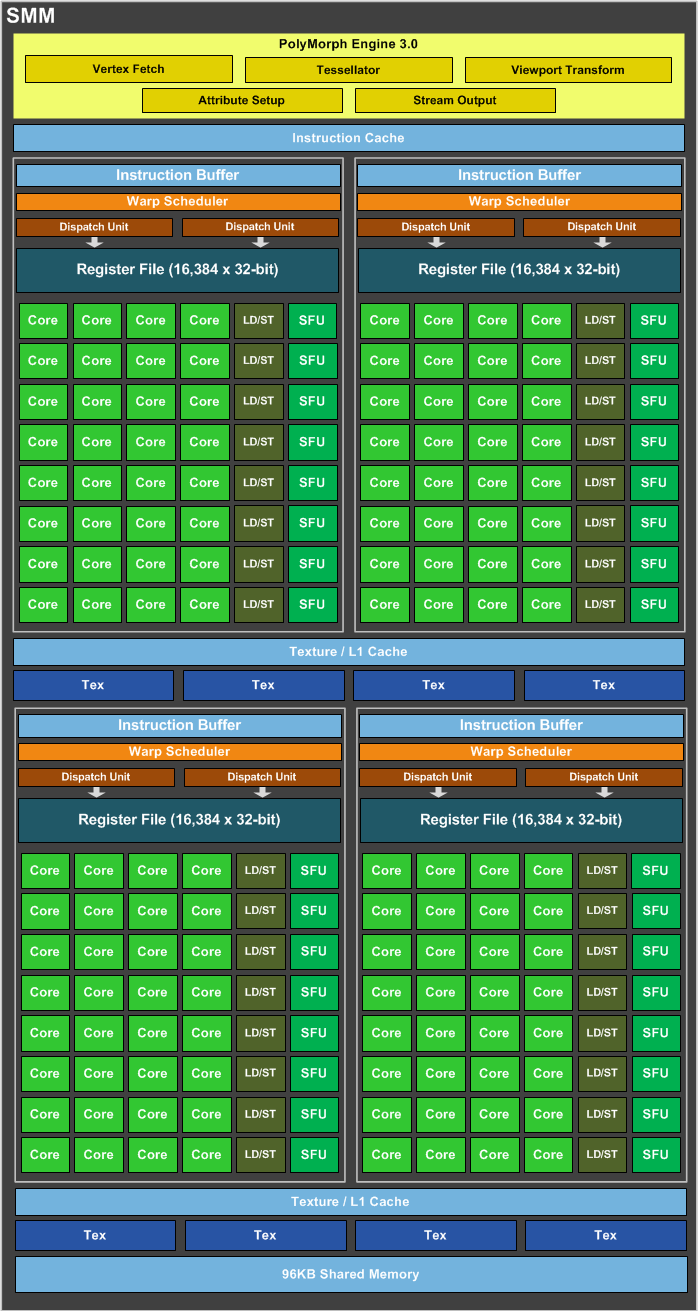

AMD GCN The world came to a crashing halt when AMD introduced GCN. AMD's previous designs, all the way back to R520 it inherited from ATi, were VLIW-5 (with a late and minor detour to VLIW-4). "VLIW" is "Very Long Instruction Word", meaning an instruction word is a package of instruction and data. VLIW in this case is five units wide, so a VLIW-5 operation on, say, TeraScale 2, can do five FP32 operations: But has to do them all at once, and there are restrictions as to what instructions can be used together. VLIW-5 was chosen as it could single-cycle a dot-product3 lighting calculation, but real games generally ran instructions four wide, not five, so TeraScale 2's immense peak compute performance was not backed up by similarly immense game performance. Indeed, loading up TeraScale 2 with intensive operations which used the VLIW-5 design optimally would usually cause it to thermally throttle. AMD did rework TeraScale 3 to VLIW-4 in Cayman and the first APU generation (e.g. Llano, Richland), which helped efficiency somewhat. GCN is very different, it is a SIMD-16 design at core. Each GCN Compute Engine contains up to sixteen Compute Units, the diagram of a Compute Unit is shown below.  So these are four SIMD-16 units in parallel, meaning they can do four instructions at once, each instruction on sixteen FP32 values. A Wave64 wavefront presented to a CU an be viewed as a 4x64 array, where wavefronts 0 to 3 are being executed at any one time. It's best to view it clock by clock: 0. Wavefronts are dispatched. 1. Instruction 1 loads 16 values each from wavefronts 0 to 3 into all four SIMD-16s 2. Instruction 1 is completed, Instruction 2 loads 16 values from each wavefront... 3. Instruction 2 is completed, Instruction 3 loads 16 values from each wavefront... 4. Instruction 3 is completed, Instruction 4 loads 16 values from each wavefront... 5. Repeat from step 1 with newly dispatched wavefronts A GCN compute unit therefore works on four wavefronts at once, and can issue new wavefronts every four cycles. Unfortunately, if we only have one wavefront, we still block the entire four as we can only load a new one every four cycles. This means in games GCN tended to be under-utilised. Over time developers did learn to work better with GCN - They had to: GCN won the console. It was the GPU architecture of the Xbox One and PlayStation 4. If a game engine ran badly on GCN, it was a has-been engine, so there was an incredible rush by engine developers to get the best out of GCN. On release, a Radeon HD 7970 was as much as 20% slower than a GeForce GTX 680 in unfavourable cases, and in general around competitive across the board. Four years later, the GCN-based 7970 was as much as 40% faster than the GTX 680 in contemporary workloads. Games better learned how to handle GCN's one-in-four wavefront dispatch. GCN also, which wasn't exposed to begin with, had a really cool feature called asynchronous compute. This allowed compute tasks to be "slotted into" spare wavefronts. GCN had a lot of spare wavefronts! This meant AMD was aware of GCN's wavefront issue problem from the get-go, and had hardware to alleviate it. The asynchronous compute engine as finally enabled to generic software in DirectX 12. Nvidia also supported asynchronous compute, but emulated it in software. There was some benefit on the Green Team, but not as much. Nvidia primarily has less wasted capacity in a wavefront anyway. GCN initially had poor tessellation throughput. Games barely used it, so one tessellator was fine for first generation GCN (GFX6). Because this was an AMD weakness, Nvidia pushed tessellation heavily in its GameWorks middleware! In some cases, tessellation was so extreme that 32 polygons were under each pixel, dramatically slowing performance for no additional image accuracy at all. GCN's tessellation issues were mostly worked out in GFX7, Hawaii and Bonaire. Hawaii doubled the tessellators. The next enhancement to GCN was GFX8, Fiji and Tonga. Polaris (Ellesmere, Baffin and Leka) was also GFX8 level. AMD claimed a "color compression" boost, but this is GPU engineering parlance for anything which causes more effective utilisation of memory bandwidth. Larger L2s and CU prefectching added most of GFX8's advantage, where it had advantage at all. The peak of GCN was "NCE", used in Vega. Vega shipped quite badly broken, the Primitive Shader (combines vertex and pixel data for dispatch) was either broken or didn't add any performance. Vega's CU occupancy in even highly optimised games was around 50-70%, almost criminally bad, and this was the best of GCN. The Drawstream Binning Rasteriser also appeared to be non-functional, but it almost certainly did work. Vega managed a substantial improvement over Fiji, even clock corrected, despite having much less raw memory bandwidth. Like Maxwell, Vega made better use of the on-chip storage and, like Maxwell, the tile based dynamic rendering shows most benefit in bandwidth constrained situations. | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

AMD Radeon HD 7970 - 2011 AMD's Graphics Core Next was originally a codename for what was coming after VLIW-4 (Cayman, seen in the HD 6970), the instruction set was to change from VLIW to SIMD. Each GCN "block" consists of four vector ALUs (SIMD-16) and a simple scalar unit. Each SIMD-16 unit can do 16 MADDs or FMAs per clock, so 128 operations per clock for the whole thing. The texture fetch, Z and sample units are unchanged from Terascale2/Evergreen, there are 16 texture fetch, load/store units and four texture filter units per each GCN "compute unit". AMD's Radeon HD 6000 generation had been disappointing, with rehashes of previous 5000 series GPUs in Juniper (HD 5750 and 5770 were directly renamed to 6750 and 6770) while the replacement for Redwood, Evergreen's 5 CU part, was Turks, a 6 CU part. It seemed a bit pointless. The high-end was Barts, which was actually smaller and slower than Cypress. Only the very high end, Cayman, which was a different architecture (VLIW-4 vs VLIW-5), was any highlight. AMD pulled this same stunt some years later with Polaris: If it doesn't feel it can compete at the high end, it doesn't waste resources on it. TeraScale 3 (VLIW-4, Cayman) wasn't quite ready, and Cypress wasn't enough to go up against Fermi-B, so Barts definitely wasn't. This meant AMD aimed at a solid mid-range and left the high end to Nvidia. Anyway, back to the 7970. On release, the HD 7970 was as much as 40% faster than Cayman. Such a generational improvement was almost unheard of, with 10-20% being more normal. Tahiti, the GPU in the 7970, was phenomenally powerful. Even Pitcairn, the mainstream performance GPU, was faster than everything but the very highest end of the previous generation. Over time, as games and drivers matured, Tahiti gained more and more performance. On release it was of similar performance to the GeForce GTX 680, but a few years later it was running much faster. It kept pace so well that, eventually, its 3 GB RAM became the limiting factor! Tahiti was one of those rare GPUs which takes everything else and plain beats it. It was big in every way, fast in every way, and extremely performant in every way. Notably, its double-precision floating point performance was 1/4, meaning it hit almost 1 TFLOPS of DP performance. That was still at the high end of things in 2016. UVD in Tahiti was version 3.2, which could handle the entropy decoding part of a MPEG-2 or MPEG-4 SP/ASP bitstream, so could take the unpacked bitstream from the CPU and handle the rest of it itself. H.264 was, of course, fully handled, as was VC-1 The Radeon HD 7970 was the first full implementation of the "Tahiti" GPU, which had 32 GCN compute units, organised in four clusters, clocking in at 925 MHz. This put it well ahead of Nvidia's competing Kepler architecture most of the time. An enhanced "GHz Edition" was released briefly with a 1000 MHz GPU clock (not that most 7970s wouldn't hit that), which was then renamed to R9 280X. At that point, only the R9 290 and R9 290X, which used the 44 units of AMD's "Hawaii", a year later, was any faster. This card eventually died an undignified death, beginning with hangs when under stress, then failing completely. As it was on a flaky motherboard (RAM issues), I assumed the motherboard had died, and replaced it with a spare Dell I got from the junk pile at work (Dell Optiplex 790). This video card couldn't fit that motherboard due to SATA port placement, only when a PCIe SATA controller arrived did the GPU's failure become apparent. It was likely an issue on the video card's power converters and the Tahiti GPU remains fully working on a PCB unable to properly power it. One last note on these. When cryptocurrency first became a thing, Tahiti was by far the most effective way of mining it. In 2012, you could mine Bitcoin on this GPU and run at a profit, even at the very low prices of Bitcoin back then. If you did it for, say, six months in 2012, you'd have probably ended up with two or three entire Bitcoins. The Scrypt based coins in 2013, intended to be too memory intensive for ASIC miners, could give you $10 (USD) per day in profit. Tahiti's enormous (for the time) memory bandwidth also lent it well for mining ZCash (it would do about 280-290 hashes per second), as ZCash was heavily memory bandwidth reliant. The 264 GB/s of Tahiti was above everything else which was widely available. Hawaii (R9 290/290X and their 300-series renames) was faster, but also much, much more expensive and harder to obtain. Fiji was also faster, but also very expensive and hard to buy. For cryptomining, Nvidia had nothing in that day. GTX 680 ran at 3409 GFLOPS and had 192 GB/s bandwidth, for example. Core: 32 ROPs, 128 TMUs, 925 MHz RAM: 384 bit GDDR5, 1375 MHz, 264,000MB/s (GDDR5 is dual ported, so is actually a form of QDR) Shader: 32x Unified shader 6.5 (32 GCN blocks in 4x8 configuration - 2048 individual shaders) MADD GFLOPS: 3,790 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

Zotac GeForce GT620 1GB DDR3 - 2012

This bears a sticker on the rear telling us it was manufactured in 2014, at which point the GF119 GPU was three years old. It has debuted as a low end part of the GeForce 500 series, in GeForce 510 and GeForce 520. The GeForce 620 retail normally used the GF108 GPU (even older, and first appeared in the GeForce 430), but OEM parts were GF119. This was a relabel of the GeForce 520 and used to meet OEM lifetime requirements. The provenance of this particular card is not well known: It arrived non-functional in an off-the-shelf PC which had onboard video (GeForce 7100) as part of its nForce 630i chipset, so clearly was not part of that PC when it shipped. It used the old Fermi architecture and only one compute unit of it, giving it just 48 CUDA cores. The GPU clock ran at 810 MHz (the CUDA cores in Tesla and Fermi were double-pumped) and DDR3 ran at 900 MHz over a 64 bit bus, all reference specs, as this card doesn't show up on the bus. In a system which could keep the IGP running with a video card present, the GT620 actually appeared on the bus and could be queried. It turned out to be a Zotac card with an 810 MHz GPU clock and 700 MHz DDR3 clock. No display output was functional of the HDMI and DVI present. The header for a VGA output was fitted, but the actual port was not. In later testing, the GT620 was found to be fully functional. Most likely some manner of incompatibility with BIOS or bad BIOS IGP settings caused it. The system it was in had lost its CMOS config due to a failed motherboard battery. The GeForce GT620 was very cheap, very low end, and very slow. It would handle basic games at low resolutions, such as 1280x720, but details had to be kept in check. In tests, it was about as fast as a Core i5 3570K's IGP and around 30% better than the Core i5 3470's lesser IGP. Given they were contemporaries, one wondered exactly who Nvidia was selling the GeForce GT620 to. The GeForce GT520's life did not end with the GT620. It had one more outing as the GeForce GT705, clocked up a little to 873 MHz. Its contemporary in the bargain basement was AMD's Radeon HD 5450 and its many relabels (6350, 7350, R5 220), which it more or less equal to. Core: 4 ROPs, 8 TMUs, 810 MHz RAM: 64 bit DDR3, 700 MHz, 11.2GB/s Shader: 1x Unified shader 5.1 (1 Fermi block - 48 individual shaders) MADD GFLOPS: 155.5 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

Asus GT640 2 GB DDR3 - 2012

This large, imposing thing is actually Asus' GeForce GT640. What could possibly need such a large cooler? Not the GT640, that's for sure. The DDR3 version used Nvidia's GK107 GPU with two Kepler units, for 384 cores, but also 16 ROPs. The DDR3 held it back substantially, with the RAM clock at 854 MHz, the 128 bit bus could only deliver 28.5 GB/s. The GPU itself ran at 901 MHz on this card. Asus ran the RAM a little slower than usual, which was 892 MHz for most GT640s. GK107 was also used in the GTX 650, which ran the GPU at 1058 MHz, 20% faster, and used GDDR5 memory to give 3.5x the memory performance of the GT640. It was around 30% faster in the real world. It, along with the surprisingly effective (but limited availability) GTX645, was the highlight of Nvidia's mid-range. The GT640, however, was not. GT640 was among the fastest of Nvidia's entry level "GT" series and did a perfectly passable job. Rear connectors were HDMI, 2x DVI and VGA. It could use all four at once. At the entry level, performance slides off much quicker than retail price does, and while GT640 was near the top of it, it was still much less cost-effective than GT650 was. The very top, GTX680, was also very cost-ineffective. The low end and high end of any generation typically have similar cost to performance ratios, the low end because performance tanks for little savings, and the high end because performance inches up for a large extra cost. RAM: 128 bit DDR3, 854 MHz, 27,328 MB/s Shader: 2x Unified shader 6.4 (2 Kepler blocks - 384 individual shaders) MADD GFLOPS: 691.2 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

EVGA GeForce GTX 680 SuperClocked Signature 2 GB - 2012 The GTX 680 was the Kepler architecture's introductory flagship. It used the GK104 GPU, which had eight Kepler SMX units (each unit had 192 ALUs, or "CUDA cores"), each SMX having 16 TMUs attached and the whole thing having 32 ROPs. Memory controllers were tied to ROPs, each cluster of four ROPs having a 32-bit link to a crossbar shared among four ROPs, so each crossbar memory controller, which served 8 ROPs, had a 32 bit memory channel to RAM. With 32 ROPs, GTX 680's GK104 had 256 bit wide memory. Kepler appeared to have been taken by surprise by AMD's GCN, but just about managed to keep up. As games progressed, however, GK104's performance against the neck-and-neck Radeon HD 7970 began to suffer. In more modern titles, the Tahiti GPU can be between 15 and 30% faster. Nvidia's Kepler line-up was less rational than AMD's GCN or TeraScale 2, but still covered most of the market: GK107 had 2 units GK106 had 5 units GK104 had 8 units Nvidia disabled units to make the single unit GK107 in GT630 DDR3 and the three unit GK106 in GTX645. The second generation of Kepler, in (some) Geforce 700s added GK110 with 15 units, Nvidia pulled out all the stops to take on GCN, and more or less succeeded. There was also a "third" generation to Kepler, though this was really the second generation, it was the same feature level as GK110. GK208 was a tiny little thing, it had 2 SMX and was an almost like-for-like replacement of GK107. It cut off the NVENC video encoder and raised CUDA capability to 3.5, but also repackaged the ASIC from a 908 pin BGA to a 595 pin BGA, by cutting off half the memory width, it was reduced from 128 bits to 64 bits. GK208 was the most cut down thing which could even call itself Kepler: The smallest a Raster Engine could be was two SMXs! GK210, however, was something special. It was a third generation to Kepler, had 15 SMXs, raised CUDA to 3.7 and had a 384 bit memory bus. The five GPCs of GK210 (each with three SMXs) meant it could output 8x5 = 40 pixels per clock, but had 48 ROPs, so eight of the ROPS were always going to be underutilised, just like in GK110, which it was a fairly minor revision of. GK210 was only exclusively used in the Tesla K80, though it may have been used in some GTX TITAN and GTX 780 Ti cards. They were pin-compatible in BGA-2152 format. We're getting ahead of ourselves. GTX 680 was released into a world where AMD's Tahiti, as Radeon HD 7970, was owning everything, in everything. How did GTX 680 fare? Surprisingly well. Kepler was designed as the ultimate DirectX 11 machine and it lived up to this... These days, however, by showing how badly it has aged. While the 7970 kept up with modern games - it was still quite potent well into 2020, limited mostly by its 3 GB RAM, the GTX 680 tended not to maintain its place in the lineup. The newer the game, the more the 7970 beats the GTX 680 by, even if we don't hit the limits of 2 GB. Sample Benchmark Results Luxmark 3.1 (Hotel): 928 Unigine Valley (Basic, 1280x720): 3,450 Unigine Heaven (Extreme, 1600x900): 1,227 Unigine Heaven (Extreme HD, 1920x1080): 1,910 Unigine Superposition (1080p Medium): 6,118 Core: 32 ROPs, 128 TMUs, 1150 MHz RAM: 256 bit GDDR5, 1552 MHz, 198,656MB/s Shader: 8x Unified shader 6.4 (8 Kepler SMX - 1536 individual shaders) MADD GFLOPS: 3,532 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

Nvidia Quadro K2000 2 GB GDDR5 - 2013 The Quadro K2000 was Nvidia's "mainstream" professional video card at $599 on launch in 2013. It is based around the Nvidia GK107 GPU, which has three Kepler blocks on it, each of which contains 192 CUDA cores (256 functional units including the very limited-use special function unit), giving 384 shader units on this GPU. Practically, it's a GeForce GTX 650 with double the memory and lower clocks. The memory on this professional card is not ECC protected and provided by commodity SK Hynix, part H5GQ2GH24AFR-R0C. Most GTX 650s (and the GT740 / GT745 based on it) used double slot coolers, while this uses a single slot design. The GTX 645 used almost the exact same PCB layout as the Quadro K2000, but the more capable GK106 GPU. The GTX 650 used a slightly different PCB, but also needed an additional power cable. Nvidia put Kepler's enhanced power management to good use on the K2000, and, in testing, it was found to throttle back quite rapidly when running tight CUDA code, the kind of thing a Quadro is intended to do. When processing 1.2 GBs worth of data through a CUDA FFT algorithm, the card had clocked back as far as 840 MHz, losing over 10% of its performance. It stayed within its somewhat anaemic 51 watt power budget and reached only 74C temperature. Professionals wanting more performance than a $150 gaming GPU should have probably bought a GTX 680 a few months earlier with the money, and had enough left over to get some pizzas in for the office. Professionals wanting certified drivers for Bentley or Autodesk products should note that both AMD and Nvidia's mainstream cards and drivers are certified. This came out of a Dell Precision T5600 workstation, where video was handled by two Quadro K2000s (GTX 650 alike, $599) to give similar performance to a single Quadro K4000 (sub-GTX 660 $1,269). By the time it arrived here, one of the K2000s was missing and the remaining one was quickly donated to a friend. The K4000 was probably the better choice, but that's not what we're here for. Core: 16 ROPs, 32 TMUs, 954 MHz RAM: 128 bit GDDR5, 1000 MHz, 64,000 MB/s Shader: 3x Unified Shader 6.4 (3 Kepler SMX - 384 individual shaders) MADD GFLOPS: 732.7 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

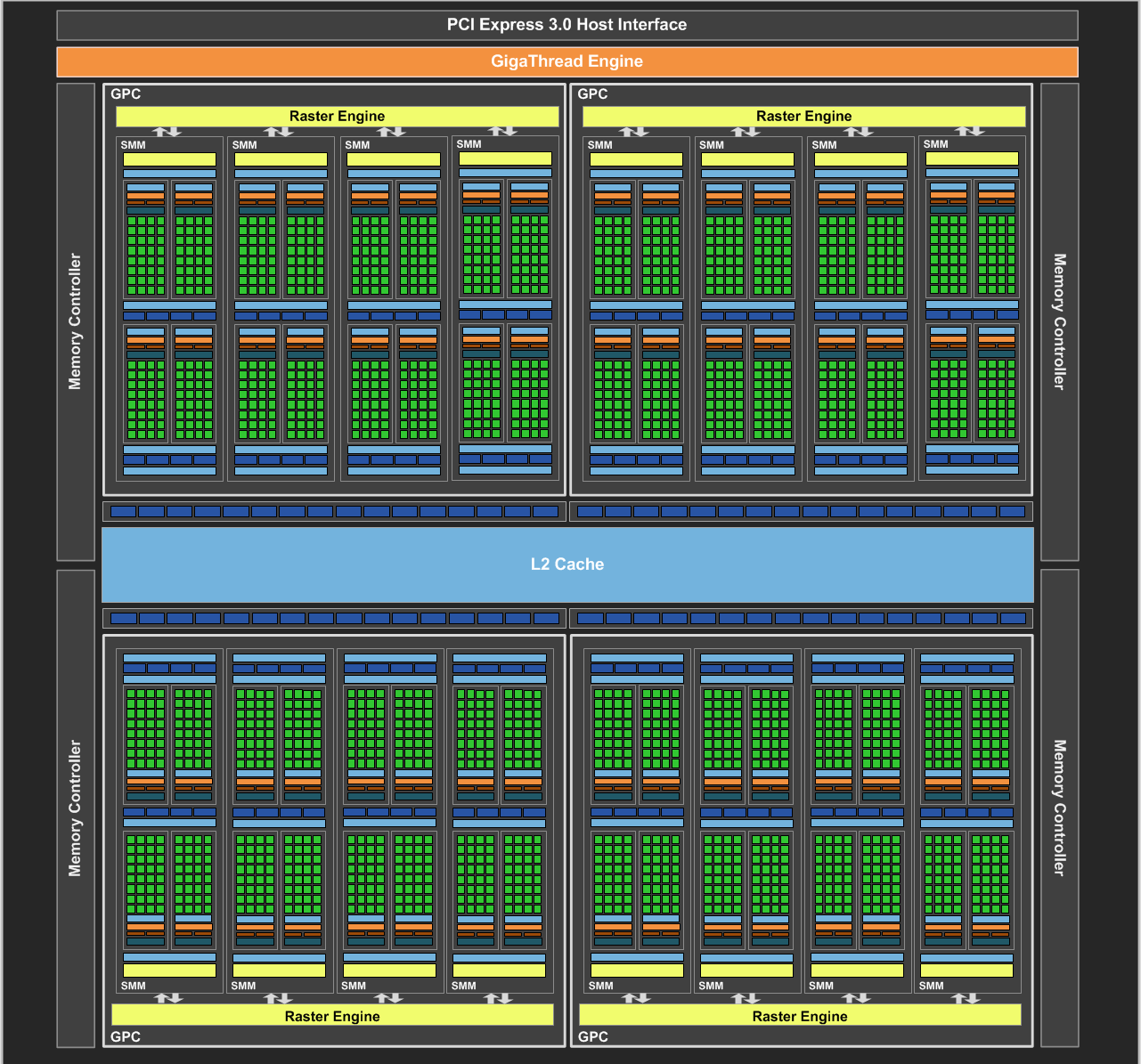

MSI GTX 970 Gaming 4 GB - 2014 "Gaming" for the 900 generation was MSI's mainstream, there's really nothing special here. Clocks are up a little compared to reference, but not enough to be worth talking about. Power in is PCIe 8 pin and PCIe 6 pin, it will not work with two six pins (even if they are sensed for 125 watts each). MSI sets a power target of 200 watts and a limit of 220 watts in BIOS. This higher power limit wasn't unusual for a GTX 970. In my testing, the GPU didn't go much above 150 watts. MSI's "Twin Frozr" cooler comes from the time when anything ending in "-r" lost its last vowel (Twitter was originally Twittr, Tumblr, Flickr, etc.) and is a very competent cooler, five heatpipes do a laudable job of moving heat from the large GM204 die. VRMs are 6 phases for the GPU and two double-output phases for the RAM controlled by a common OnSemi NCP81174 PWM controller, also used by the GTX 980s (at least reference designs) which was designed for low cost, so doesn't have an I²C interface for monitoring of power delivery. Memory is Samsung K4G51325FC-HC28, so GDDR5, 512 Mbit, 32 bits, 16 banks, 0.28 ns cycle time (7.1 Gbps per pin). The GPU is the GM204, the revised Maxwell, and made on TSMC's 28HPM process, it throws 5.2 billion features in its 398 mm² area.

The top level organisation of GM204 is the Graphic Processing Cluster (GPC), just like Kepler. Each GPC has four streaming multiprocessors (SMs), and each SM contains four execution units, each eight-wide, and each having their own instruction buffer, warp scheduler, and dual-dispatch units. A single execution lane is SIMD-128 and made of four FP32 "cores". The four FP32 cores are joined by a load/store unit and a special function unit on each lane. Now we have a basic understanding of GM204's organisation, we know it has 16 total SMMs. A logical way to partition this is to disable a single GPC, dropping it to 12 SMMs... Nvidia instead chose to drop it to 13 SMMs. In itself, this is not a problem, and would not be a problem. What else Nvidia did, possibly driven by Maxwell's architecture, possibly a completely brainless decision, was cut the ROPs (render output pipelines) from 64 to 56. Each block of 8 ROPs is linked to its own segment of L2 cache. In GM204's specs, Nvidia was very misleading about this, and it took microbenchmarking to reveal GM204's real architecture. We'll talk about the real architecture, not Nvidia's marketing, exclusively. Nvidia said GTX 970 had four 64 bit memory controllers. It does not, it has eight 32 bit memory controllers. This is important, keep it in mind! Each memory controller is hosted by a 256 kB L2 cache. The L2 cache is linked up to a coherency crossbar. This crossbar keeps track of what's in L2 cache so each functional unit doesn't try going out to RAM to access data already cached, and memory changed by one unit can be referenced by another without the "old" data in RAM being touched. Cache coherency is well documented across the Internet, we won't rehash it all here, so the L2 TLBs (transaction look-aside buffers) are in the crossbar. Nvidia disabled one of the 256 kB L2 segments in GTX 970. From what we know already, this means that the memory controller attached to that L2 segment is uncached. This means it is non-coherent. That is, if anything accesses memory on that controller all other memory accesses have to stop. Nvidia's SVP of GPU Engineering, Jonah Alben, relased a diagram showing this, but this was also deceptive. It showed the last memory controller being a client of the L2 cache of the memory controller next to it. This was a lie! This memory controller was not a client of anyL2 cache, it went straight to the crossbar. While the data path did go through the L2 cache, it wasn't a client of it, it bypassed L2 and it is better to show it going directly to the crossbar. Alben also stated memory bandwidth remained at 224 GB/s, as it can be reached when "memory is accessed in both pools". This was also incredibly deceptive, in fact, it was an outright lie. When the uncached memory was being accessed, every other memory channel was blocked to maintain coherency. If coherency was breached, the GPU would no longer know what was in memory anywhere and would have no choice but to halt all execution - it would crash. For a machine which can run, at peak, 272 hardware threads, having the entire of memory held up by a single 32 bit uncached non-coherent memory channel is absolutely unacceptable. It would completely destroy performance, the GTX 970 would run slower than a GTX 960. This was, of course, unacceptable. So Nvidia's drivers simply didn't use that memory. If you loaded up a demanding game using DX10 or DX11 virtualised video memory, you would see around 3,500 MB in use. Nvidia was still releasing drivers with "coherency updates" some ten months after GTX 970's launch. These were thought to be hints to the driver on when it was and wasn't safe to use the non-coherent memory. My speculation is that, not long before release, Nvidia realised GTX 970 was far too close to GTX 980 (which cost 40% more) and it was in danger of making the GTX 980 irrelevant, so a last minute decision to cut back the L2 cache was made, which didn't have time to be properly accounted for. This became a massive controversy at the time. Nvidia had lied about ROP count in its guidance to reviewers (suggesting this was a last-minute decision, or someone didn't understand what Maxwell's architecture would mandate if a segment of L2 cache was disabled), lied about memory performance, doubled down on both lies, then partially came clean, but included two more lies while doing so. Finally, about eight months after launch, Nvidia admitted the 512 MB memory segment on the GTX 970 was uncached and, therefore, non-coherent. Why not come clean immediately? To do so would have resulted in everyone referring to it as a 3.5 GB product. Non-coherent memory might as well not exist, using it severely harms performance. In the end drivers in DX 11 and earlier would use it as a buffer before evicting pages to system RAM, it was a kind of L3 victim cache. In DX 12, drivers plain would not use it at all unless they absolutely had to. What a mess! Anyway, the difference between 3.5 GB and 4 GB in 2014 was almost nothing. People were more upset about the lies and Nvidia's really hamfisted way of responding to it. Its performance for its price was very competitive, slightly edging out the previous generation's massive GTX 780 Ti in some tests, and being edged out by it in others. AMD's equally huge R9 290X was usually lagging by quite a lot. In intensive games, it could double the performance of the GTX 680 which this GTX 970 replaced... in 2025! The son's gaming PC was due a rebuild and he's not terribly demanding, so the first component was this GTX 970. It was only £55, so an impulse buy, even if that was a bit steep. Raw specs aren't much over the GTX 680. In some cases it even lags. Sample Benchmark Results Unigine Heaven (Extreme, 1600x900): 1,773 Unigine Valley (Extreme HD, 1920x1080): 2,292 Unigine Superposition (1080p Medium): 7,663 Unigine Superposition (1080p High): 5,518 Oh. Yeah. That's what Maxwell did. Maxwell was able to use dynamic sized tiles when rendering to fit as much as possible into the GPU's caches (which had increased a lot), it also resized the basic warp dispatch to achieve higher core occupancy. The basic configuration of Maxwell became the basis for all subsequent Nvidia GPUs, in either 128 cores per SMM or 64 cores per SMM configuration. Maxwell was Nvidia's "GCN moment" and Nvidia wouldn't make this level of change to its architecture until Ampere and Ada, even then the changes were over two generations. Core: 56 ROPs, 104 TMUs, 1178 MHz RAM: 224 bit GDDR5, 1753 MHz, 196,224 MB/s (No, we're not including the non-coherent partition) Shader: 13x Unified shader 6.7 (13 Maxwell SMM - 1,664 individual shaders) MADD GFLOPS: 3,920 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

Asus Strix GTX 980 DirectCI II OC - 2014 In 2025, the son's GTX 680 was overdue an update, though he didn't mind it. I found an impulse-buy GTX 970 at £55 so just bought that for him. It's the MSI Gaming one just above. Then some legend on Reddit offered me this thing for free not even a month later. Fair enough. He wouldn't even accept the cost of shipping. GTX 980 wasn't affected by the uncacheable memory issue of the GTX 970, since all 2 MB of L2 cache was enabled on the GM204 die. In fact, the entire GM204 die was enabled. GM204 had four GPC, "Graphics Processing Clusters". Each GPC had four SMMs, "Streaming Multiprocessors", and each SMM had four CUDA clusters, a "functional unit", a single functional unit, being eight four-way SIMD units, so could do the same operation on four things at once. To illustrate how highly threaded a GPU is, each CUDA cluster can have eight threads in flight at any one time. Each SMM has four of those. Each GPC has four of those and the whole GM204 chip has four of those. This is 512 hardware threads being actively executed at any one time.

Going back to the SMM, we can see the four cores per CUDA cluster: This is misleading, as these are really ALUs. A contemporary Haswell CPU had eight "cores" in every execution engine, so a Core i7-4790K, with four CPU cores, had 32 "cores" if we counted "cores" like Nvidia does. See those rows of Core, Core, Core, Core, LD/ST, SFU? Each one of those is an "functional unit" (FU). These are eight way multithreaded inside the SMM, along with four more like them. Moving up from the functional units, we reach the dispatch units and register file. The register file is the data the cores can access without penalty, so the data arrives with the instruction. Each execution unit will be working on four 32 bit values at once, we have eight of them, so we have 128 registers being actively used at any one time. The dispatch unit controls occupancy of the registers and the FUs, but you will notice we only have two of them. This is really one dual-issue dispatch unit. This dual-issue dispatch unit can issue up to two operations per clock from a warp, a warp is eight SIMD-128 instructions. Each FU is pipelined four-deep, so can be working on (ideally) four instructions at once, but rarely would be, and would cause huge overheating if it was that heavily utilised! The scheduler and dispatcher work in concert, so we can skip up a level to the instruction cache and texture cache. Anyone familiar with CPU architecture is going to instantly recognise this as a level 1 instruction cache (L1I$) and a level 1 data cache (L1D$). They would not be wrong. Previoius Nvidia architectures had aliased L1 cache to local shared memory, but not so in Maxwell. L1D$ in GM204 is 24 kB, while L1I$ is 16 kB. The shared memory can be thought of as a small L2 cache, and most applications treat it this way. Each SMM also has eight texture units, each able to sample a single RGBA value using a programmable sample matrix. Moving out from this, we have the entire GPU. The SMM joins four more like it in the GPC, and the GPC is unified by a Raster Engine. This is what handles pixel data, and sends it out to the ROPs.

We can see blocks of four render output pipelines, ROPs, alongside the L2 cache, this is a little misleading. Each ROP is 16 pixel units (which Nvidia calls "ROPs", for historical reasons) so we can output 64 pixels per clock to L2 cache, from there the cache and crossbar handle our writing out to RAM. The ROPs are absolutely critical to Maxwell. They're very closely linked to the crossbar, so data going out back to memory always goes through a ROP, even if it is unchanged by the ROP. This is done as the crossbar enforces memory coherency via L2 tag RAM, something extremely important on a massively multithreaded architecture. After the crossbar is the L2 cache and memory controllers. These are arranged in pairs, so each "memory controller" has two segments of L2$ and each of these has one memory controller, so each 32-bit memory channel going out to DRAM (GDDR5 here) has its own 256 kB L2 cache. This L2$ is only able to cache the memory associated with its own memory controller, which is 512 MB in the GTX 980 (and GTX 970). This brings total L2$ to 2048 kB, at which point it's worth noting that GK104 in Kepler had 512 kB for the entire GPU. Each 32 bit memory controller in Kepler (which it had the same four pairs as Maxwell) had just 64 kB L2 cache. It barely did anything! The GTX 970 entry covered how tightly coupled ROPs are to L2$ segments, via the crossbar. And that's GM204 in a nutshell! It's a point of inflection in GPU design, GPUs had become so large and parallel that even their own stupidly fast "the memory is the cache" memory buses couldn't keep up, so Maxwell invested heavily in caches. Maxwell was a huge generational improvement from Kepler, even delayed by a year with TSMC's 20 nm process being limited (Maxwell was back-ported to late-generation 28 nm), that Maxwell was the first Nvidia GPU to be "truly modern", bringing Nvidia up to AMD's GCN capability levels. GM204's desktop products were the GTX 960 OEM, GTX 970, and GTX 980. The GTX 960 OEM was a little notable as it had more SMs than the standard 960 and a 192 bit memory bus, though it declocked them both to very similar (slightly more) than the standard GTX 960. Mobile GTX 980 also used the GM204, in a cut-down configuration, but with 8 GB VRAM in some models. These aged very well! Nvidia reserved 8 GB on desktop for its Quadro parts, the Quadro equivalent of a GTX 980 was the Quadro M5000. One criticism of the GTX 980 is that it has not aged well. The throughput of the GPU, 5.2 TFLOPS, puts it right next to the RX 470 from two years later or the RX 570 a year after that. Both of those, however, had common and popular 8 GB versions and remain effective low-end GPUs even in 2025. The GTX 980 would out-perform both of these if it was not limited to 4 GB VRAM and Nvidia never authorised anyone to make 8 GB versions. An engineering sample of a GTX 980 with 8 GB surfaced in 2017 and the Quadro M5000 was basically a declocked GTX 980 with 8 GB. Some mobile GTX 980M parts had 8 GB, as this was an option there. Maxwell's successor, Pascal, kept the exact same caching layout as Maxwell. GP104 Pascal reorganised the SMs into pairs behind a "texture processing cluster", TPC, something which really already existed in Maxwell, but was formalised in Pascal. TU104 in Turing built on this by putting two SMs into a TPC and doubling L2$ to 512 kB per 32-bit memory controller, this doubling of L2 cache was responsible for practically all of Turing's performance uplift, where it existed. Ampere was to further modify this by moving the ROPs into the GPCs, but disabling a ROP still took out that ROP's segment of L2$ and disabling a L2$ segment took out the ROP. This particular card wasn't stable in the kid's machine, so was replaced with the GTX 970 already mentioned but intensive stress testing in the server machine could not make it produce a fault, though it did crash a 3D benchmark (by hanging the entire machine, as it had done on the kid's PC) once. The VBIOS of the Asus Strix OC card ahd a power limit of 240 watts, which corresponded to about 185 watts on the GPU die, 40 watts on the VRAM, and about 15 watts lost in VRM conversions. At 240 watts, Asus' cooler would keep it at a comfortable 78 celsius steady-state with a hotspot touching 91 degrees. GPU clock under this full load would be about 1260-1290 MHz depending on the exact workload. Core: 64 ROPs, 128 TMUs, 1279 MHz RAM: 256 bit GDDR5, 1753 MHz, 224,400 MB/s Shader: 16x Unified shader 6.7 (16 Maxwell SMM - 2,048 individual shaders) MADD GFLOPS: 5,240 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|