| [NO IMAGE YET] |

HTC HD2 / HTC Leo - 2009 HTC had long manufactured smartphones and PDAs, but usually under someone else's name, such as a network's own brand, Dell and so forth (ZTE did the same from 2009 to 2012, and continues to). The HD2 was one of HTC's first releases under its own name, and what a phone it was. With a powerful 1 GHz Qualcomm "Snapdragon" QSD8250, a beautiful 800x480 4.3 inch screen and solid metal construction, its specifications were right up there with the best of anything else on the market. It was saddled, however, with Windows Mobile 6.5 Professional. Windows Mobile 6.5 wasn't bad, but it wasn't good either. The new iPhones were greatly inferior hardware and much more cheaply manufactured, but their OS was far ahead of Windows Mobile. Even Google's early Android phones ran better as phones than the HTC HD2 did, so hobbled was it by Windows Mobile. General Magic back in the 1990s had shown that the UX (User Experience) was king with this kind of carried device, but Microsoft never got the memo. Indeed, General Magic coined the term UX, as well as being the finest assembly of talent ever employed in Silicon Valley. The key lesson was that cheaper hardware can be sold for a much higher price if the user experience is top notch: Software above all else. Apple learned this extremely well. Rather than put up with their expensive and powerful gadgets being crippled, enthusiasts ported Android to the HD2 in mid-2010. By early 2011 it was running Gingerbread with the best of them. HTC had inadvertently enabled this by using a platform very similar to the HD2's in the Google Nexus One and HTC Desire (the Desire was virtually identical, but for a smaller screen), meaning the OS could easily be ported over. It's telling that the HTC Desire carried model numbers A8183 and A8182, while the HD2 was T8585 and T9193, very similar. Microsoft wanted a flagship Windows Mobile 6.5 device, and it got one. It just so happened that the HD2 was best used on Android.
Like the ZTE Blade on this page, a great platform hobbled by poor software had the latter fixed by the enthusiast community. | |||||||||||||||

|

ZTE Blade / Orange San Francisco The two black chip packages seen in the top image are the Qualcomm PM7540 and RTR6285. The former is a power management IC, the latter a multi-band GSM/GPRS/EDGE/HSPA transceiver, fabbed on 180nm CMOS. The silver shielded chip is a Samsung SWB-A23, a 2.4 GHz transceiver for WiFi and Bluetooth, which comprises the Atheros AR6002 802.11b/g WiFi controller and Qualcomm's 4025 Bluetooth adapter. As Atheros is owned by Qualcomm, this is simply a standard Qualcomm WLAN module, manufactured by Samsung. The reverse of the PCB contains Qualcomm's MSM7227 system-on-chip (SoC) which has the Blade's 600 MHz ARM11 CPU core and 512 MB of system RAM as well as the baseband processor. Nearby is a Hynix H8BESOUUOMCR flash RAM chip, again 512 MB. A TriQuint 7M5012H quad-band GSM/GPRS/EDGE/HSPA power amplifier completes the Blade's chippery. The CPU is only a 600 MHz ARM11, so there was no official Flash Player, but a recompile of Flash 10.2 and 10.3 did work on the ARMv6 instruction set. It's a testament to how important Flash used to be that a version of it leaked out, was rebuilt to be an installable APK, then released. Be warned that no Flash 11.x versions ever existed for ARMv6; those that claimed to be Flash 11 were actually the Flash 10 leak renamed, often with some very unwanted "extras". Qualcomm's MSM7227 is the GSM version of the CDMA MSM7627 and was phenomenally popular. The processing performance of its ARM1136J-S core was impressive for the day, and the 256 kB of onboard L2 cache helped make this one of the fastest ARM11 devices on the market - but the Cortex A8 which came after was much, much faster. Qualcomm dropped an Adreno 200 GPU in the mix, which again is pretty much bottom of the barrel, but will handle the UI and video playback capably. Adreno 200 was an unchanged Imageon Z430, which Qualcomm bought from AMD when AMD had a firesale of ATi's graphics technology.
AMD claimed it was a version of the Xbox 360's Xenos processor, and indeed it was. Imageon before the Z430 and Z460 was a result of vapourware ATi bought from BitBoys, a Finnish tech-startup known for lofty claims and no product. Z430 and Z460 were derived from ATi's R520 technology, actually a little more advanced than the Xbox 360, but they were the smallest thing which actually could be made from that technology. Imageon Z430 had one VLIW-5 unit with five ALUs running at 220 MHz. Most MSM7227s are good to about 720 MHz and this one is no different, stable at 712 and 729 MHz. The MSM7227 is also used in the HTC Wildfire, Legend and Aria, Samsung Galaxy Ace, Samsung Galaxy Mini, Sony Ericsson's X10 Mini and Mini Pro, RIM's Blackberry Curve 8520 and many, many others. Other hardware includes a digital compass, 3 axis accelerometer and gyroscope, ambient light sensor, proximity sensor, a GPS receiver, and an easily replaceable 1250 mAh battery, which in my experience will provide all-day life with average use. Playing video, not so much: at full screen brightness, you'll get about three hours of H.264 playback with the excellent MX Player. This was largely due to the high resolution screen: The standard for an entry-level device back then was around 320x240 or so, this had 800x480. The ARM11 core had nowhere near enough oomph for software H.264, so the Adreno 200's video decoder was necessary. It could not handle B-frames and only dealt with H.264 Main Profile. The whole device is 116 mm high, 56.5 mm wide and 11.8 mm deep. For comparison, an iPhone 3GS (Apple's top end device when the Blade was released) is 116 x 62 x 12 mm and has a much poorer display, while the Blade has double the memory. The headset connector is wired according to industry standards: Tip-ring-ring-sleeve. Tip is left, first ring is right, second ring is microphone, sleeve is ground.
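The wiring just described can be written down as a small lookup table, next to the alternative ordering in which the microphone and ground contacts trade places. This is only a sketch: the names `BLADE_PINOUT`, `SWAPPED_PINOUT` and `mismatched_contacts` are made up for illustration, and the second table is simply the first with its last two contacts exchanged.

```python
# TRRS contacts, listed from the tip of the plug to the sleeve.
CONTACTS = ("tip", "ring1", "ring2", "sleeve")

# Wiring as described above for the ZTE Blade's headset socket.
BLADE_PINOUT = {
    "tip": "left audio",
    "ring1": "right audio",
    "ring2": "microphone",
    "sleeve": "ground",
}

# Illustrative alternative: same plug, microphone and ground exchanged.
SWAPPED_PINOUT = dict(BLADE_PINOUT, ring2="ground", sleeve="microphone")


def mismatched_contacts(socket, headset):
    """Return the contacts where the socket and the headset disagree."""
    return [c for c in CONTACTS if socket[c] != headset[c]]


print(mismatched_contacts(BLADE_PINOUT, SWAPPED_PINOUT))  # ['ring2', 'sleeve']
```

Left and right audio line up in both orderings, which is why a mismatched headset can appear to half-work: only the microphone and ground contacts are at odds.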
Be careful here as Apple, in its lack of wisdom, decided to swap the microphone and ground (sleeve) contacts so commonly available high quality headsets wouldn't work on an iPhone and you'd have to use Apple's poor quality unit instead. Headsets compatible with an iPhone will not work with the ZTE Blade or many other handsets, so do check before buying. The headset supplied has the most awfully quiet earbuds ever. Replace it with a proper in-ear set as soon as possible if your phone is to be an MP3 player. As a phone, the ZTE Blade here is branded "Orange San Francisco" and is a pretty average entry level Android, nothing at all remarkable. It's when you install one of the many third party firmwares, such as CyanogenMod 7, that the Blade comes alive. For a phone of this price, the 800x480 capacitive touchscreen, fronted by real glass, is far and away superior to its competition, almost all of which used low resolution 320x240 or 480x320 screens. The 267 pixels-per-inch puts it almost in "retina display" class. (This was a brand Apple was using for 300 PPI+ displays; most phones were around 150-200 at the time.) With CyanogenMod 7, the UI is fast and snappy as the CPU governor slams the CPU to full speed on wake from sleep. A common problem with Android devices is UI lag, as the CPU doesn't clock up to full immediately but over the course of several seconds. There is none of that with CyanogenMod 7. Community support was exceptional, and only perhaps the HTC HD2 (HTC Leo) had such a vast and diverse array of offerings. In general use, the phone feels much better than its price and hardware would indicate, largely because of how fast CyanogenMod 7 is. In subjective UI use, it's easily as fast as a Samsung Galaxy S2. The CPU is similar to the one in the Apple iPhone 3G, though around 180 MHz faster. The phone does have one very disappointing feature, though: its camera. It's a 3.2 Mpx variable auto-focus sensor but the images at 2048x1536 are atrocious. I'll let pictures speak louder:

While a difficult scene, the camera manages to completely wash out the sky and show an enormous amount of glare, causing the foreground to be underexposed. The glare is caused by the plastic cover over the camera lens, so I popped the back off the phone and took a similar image:

The exposure is better and there's not anywhere near as much glare, but fine details are still completely lost; the image looks like it's gone through a median filter, as you'll see in the 100% crop below. Low light performance is so poor that it's not even worth trying, and the phone has no flash. Daniella will demonstrate how bad low light performance is (image has been levels-corrected). There's more noise than a football stadium on a Saturday and before the levels correction, the image looked very washed out. Colour saturation and rendering are, in a word, pathetic. The camera isn't very good, but for a £100 phone (in 2010, that is) which could be had for around £50 on eBay in 2012 we'll forgive that. The camera is also capable of 480p video but you'll quickly wish you hadn't bothered as the image quality is truly dire. In summary, this phone has an excellent screen, wonderful community support (some nutters even have Android 4.3 Jelly Bean running on it), a responsive and accurate digitizer as well as the full suite of sensors you'd expect, and is just let down by a very poor camera. Some European models had a 5.0 megapixel camera which was apparently much better. |
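The 267 pixels-per-inch figure quoted for the Blade follows from its 800x480 resolution and the screen diagonal. The text does not state the diagonal, so the 3.5 inches used below is an assumption chosen for illustration; the HD2's 4.3 inches comes from its own entry on this page. The function name `pixels_per_inch` is likewise just a label for this sketch.

```python
import math


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density: pixels along the diagonal divided by its length."""
    return math.hypot(width_px, height_px) / diagonal_in


# Assumption: the Blade's screen diagonal is not given in the text.
BLADE_DIAGONAL_IN = 3.5

print(round(pixels_per_inch(800, 480, BLADE_DIAGONAL_IN)))  # 267 (ZTE Blade)
print(round(pixels_per_inch(800, 480, 4.3)))                # 217 (HTC HD2)
```

The same resolution spread over the HD2's larger panel gives a noticeably lower density, which is why the small, cheap Blade looked so sharp for its class.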

|||||||||||||||

|

Samsung Galaxy S - 2010 Before the Galaxy S, Android phones were a bit weird. Sure, HTC et al. were pushing them out, but they were low-end to mid-range things. Nobody thought "Hey, Apple's eating our lunch here, we need a high-end phone." The high end of the market has a massive profit margin. At the time, the latest and greatest from Apple was the iPhone 4, released a few days later in June 2010. How do they stack up?

It was pretty close. iOS 4.0 was demonstrably inferior to Android 2.3 "Gingerbread", but the iPhone 4 will now run iOS 7.1 (badly, mind). Samsung didn't upgrade the Galaxy S beyond Gingerbread, but third parties released updates all the way to the most recent 4.4 "KitKat". This required a moderate amount of technical know-how. Apple saw Samsung's new devices as direct threats and began a legal campaign to try to take Samsung out of the market - innovation wasn't going to work (Apple cultivates an image as an innovator, but has historically been quite poor at it), so litigation was the choice. The proceedings are still ongoing as of mid-2014. Much was said of Samsung's OLED display. It was vibrant and black was black, without the dull glow of most other phones, but it had a bluish tint to it and colour calibration plain wasn't done. This one came to me with a broken USB port. It'd charge with difficulty, but wouldn't do data. This meant I couldn't readily hack the OS to get Android 4.2 on it since the tools used (mostly Odin) worked over USB. "Mobile Odin", an app which runs on the phone itself, was bought, but it failed to flash the ClockworkMod Recovery. During attempts to fix the USB port, the port was destroyed and so a great phone came to an inglorious end. | |||||||||||||||

|

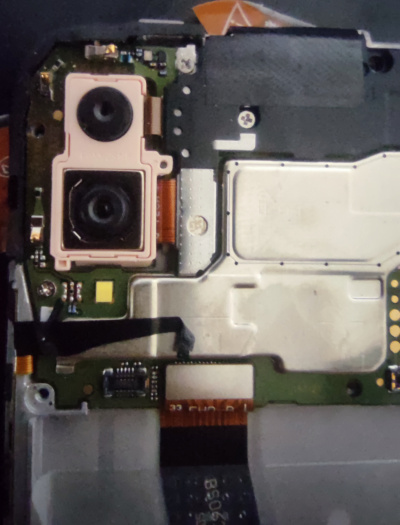

Samsung Galaxy Mini 5570 - 2011 This guy came in for a replacement screen or maybe some manner of other work, it's not well remembered. It's a Qualcomm MSM7227 at 600 MHz (later retroactively called "Snapdragon S1") with 384 MB RAM, a 320x240 screen, and just 160 MB of onboard storage (in a world where 1 GB was considered the low end). It was very, very low-end. Tellingly, it was around at the same time as the ZTE Blade, cost more, had lower specs, and nobody seemed to care much about it. The MSM7227 was ARMv6 (an ARM11 core) so was really quite slow, while the Adreno 200 GPU was old by the standards of the day, but Qualcomm had yet to do much GPU development and this was relatively unchanged from the Imageon they'd bought from AMD. Samsung, like anyone else using the MSM7227, dropped support for it after Gingerbread (Android 2.3) as the GPU drivers had not been updated by Qualcomm to support Android 4.0, nor were they ever going to be. CyanogenMod hackers got the older GPU drivers somewhat working, enabling Android 4.0, 4.1 and 4.2 to work. Slowly. The PCB shot shows us the components clearly. The MSM7227 is mounted atop the 384 MB RAM. Just above it is the Samsung storage chip. Swinging to the right shows us a Samsung 77554-12 RF amplifier; beneath that is the Qualcomm RTR6285 GSM radio (eight UMTS bands, four EGPRS/EDGE bands) and GPS receiver, first announced in 2006. Immediately below the MSM7227 is the Maxim 8899G power management IC. Above the micro-SD slot, to the left of the storage IC, is an Atheros 6003G WiFi (802.11n, 2.4 and 5 GHz bands), next to a Broadcom BCM20780C, part of the BCM207 range of Bluetooth 4.0+EDR transceivers. |

|||||||||||||||

|

Intel AZ210 - 2012 Manufactured by Gigabyte (yes, the Gigabyte most commonly known as a tier-1 PC motherboard maker and video card vendor) for Intel, and sold in India as the Xolo X900 and in Europe by Orange (now EE) as the San Diego. A Russian release was the Megafon Mint. It's based around Intel's "Medfield" Atom Z2460 at 1.6 GHz and has pretty much everything a top of the line flagship smartphone of 2012 would need. It was, however, released with Android 2.3.7 (Gingerbread) and only Xolo quickly released the Android 4.0 (Ice Cream Sandwich) update which Intel made available. Orange did later release 4.0.4, but only after ruining it with their hideous theme and built in apps. Notably, it has 1 GB RAM, double the standard for the day, 16 GB flash storage (again double), a high density 1024x600 4" display, micro HDMI port, NFC, 8 megapixel rear and 1.3 megapixel front cameras. Intel seemed to be heavily subsidising it, as it was available for £120 off-contract! A lot of enthusiasm built up around the device as it was powerful, cheap, sported average in-class battery life (long a bugbear of mobile x86) and excellent performance. This enthusiasm was soon to evaporate. A micro-SD slot is disabled in software and the bootloader is hard-locked. Installing custom ROMs or modifying system boot settings is impossible. Determined hackers have managed to enable the micro-SD slot, and speculation was that due to how the internal storage is partitioned, Gingerbread couldn't use it and an SD at the same time. While this may be true, Android 4.0 could, but the slot remained disabled. As vendor updates were released (only a small few), the methods that enthusiasts used to gain root access and enable the disabled features were removed. Fully updated, it is impossible to root or otherwise modify the device. The Android x86 developer community, which had been greatly anticipating this device, sighed sadly and went back to ARM. 
Intel refused to unlock the bootloader, and did not provide a reason. The Motorola RAZR I and ZTE Grand X were also Medfield devices and have unlockable bootloaders. It could, however, be that the AZ210 was an Intel device and just mildly customised for different markets, while Motorola and ZTE could do whatever they want with their own devices. | |||||||||||||||

|

LG/Google Nexus 4 - 2012 While the Nexus One, Nexus S and Galaxy Nexus were competent or even good smartphones, they weren't what you'd usually pick as your primary device. They cut corners, noticeable ones, as HTC and Samsung really didn't want Google treading on their toes. They were overpriced "developer devices". So the Nexus One was a customised HTC Desire, the Nexus S a customised Galaxy S, and the Galaxy Nexus was a customised Galaxy S2 (and was sold SIM-locked in some areas!). Generally the Nexus devices were in some way slightly inferior. The Galaxy Nexus couldn't record 720p video, for example. Nexus devices also do not have micro-SD storage. Initially, the Nexus 4 appeared to be uncrippled. It was of premium construction, with a beautiful glass back showing a "holographic" chatoyance effect, but had LTE (4G) disabled, since the chipset used was a very early LTE chipset and didn't support many bands. It sold about 375,000 (per serial numbers) and was a customised version of the platform also used by the LG Optimus G (LG would later refer to the Optimus G as the "G1"). The Nexus 4 was, however, deliberately hobbled. Qualcomm bins its chips according to their quality: higher quality chips will run at higher frequencies and lower voltages, so will use less power. This is known as "processor voltage scaling", or PVS. Optimus G used expensive PVS3, PVS4 and PVS5 parts. Most Nexus 4s were PVS0, 1 or the rare 2. The higher the number, the better. Nexus 4 also used a poor (cheap) camera: while it was 8 megapixels, it had a tiny sensor and a poor lens, and delivered substandard results. The metal internal chassis of the phone was deliberately air-gapped away from the processor, so couldn't work as a heatsink, meaning the processor would overheat sooner and throttle back, reducing performance.
Google said it was to control skin temperature, but I didn't notice much trouble once a piece of copper foil closed the gap (this is the same on most modern handsets, again for skin temperature reasons). None of this really mattered. Google cut corners where corners can be cut, the PVS scaling troubled only overclockers and would cost a typical user just 10 minutes of battery life per day. The camera was disappointing, but in good lighting it was as good as anyone else's. On launch, the Nexus 4 sold out in 15 minutes as it hit UK shores at £239 for the 8 GB and £279 for the 16 GB model. A comparable phone such as the Galaxy S3 was around double that. Indeed, the Samsung Galaxy S4 was little better than an uprated Nexus 4. | |||||||||||||||

|

LG/Google Nexus 5 - 2014 The photos are actually a Nexus 4! I'll fix those soon. The Nexus 4 was a version of LG's Optimus G, and the Nexus 5 was a version of the Optimus G2 (the "Optimus" was sometimes referred to, but usually dropped) or just G2. It sported an absolutely beautiful 1920x1080 display and a much improved camera, indeed one of the best cameras used in a smartphone on release. It had a powerfully rated speaker (but only one), ran the top of the line Qualcomm Snapdragon 800 with 2 GB of LPDDR3-1600 memory and 16 or 32 GB storage, and was just £300 for the 16 GB version. The camera had problems to begin with, yet the LG G2, which used the exact same optics and only upgraded the sensor to the 13 Mpx version of the same series, was applauded for an excellent camera! It had a high-end DSLR feature known as optical image stabilisation (OIS): It used magnets to move the imaging elements around to compensate for slight jitters of the hand holding the camera. Google implemented the controlling software very poorly. OIS means that blurring due to motion is eliminated, so any blurring has to be caused by focus - before this, smartphones couldn't properly focus if they were in motion and many algorithms were developed to compensate for motion blur. Google dumped these and left autofocus continually on, so focus would never lock (unless manually set) and would attempt to compensate for any blur, meaning rapid motion would knock the camera out of focus. This meant that if the camera did lose focus, it would then do a full sweep to regain it, which could be slow. On initialisation, it would also do a full sweep to find focus, where software-assisted non-OIS cameras could work out how to move the lens much faster. The camera could also have done longer exposures, since it wouldn't blur out due to small jitters, which would have helped low light performance. Google didn't do this. Poor software effectively crippled the best camera in any smartphone.
Google realised the deficiencies and released quick-fire Android updates to rectify the issue: The camera was one of the Nexus 5's flagship features, but updating the camera's driver meant updating system components, needing a full firmware update. Google had also implemented a very powerful computational photography mode, its "HDR+". It takes a fast burst of images across an exposure sweep, then extracts the best parts of each one to both provide a much larger dynamic range and much clearer images. Noisy areas can be "patched over" with clearer areas from one of the other images, using the Qualcomm Hexagon DSP to accelerate the process using a convolutional neural network: Today we'd call it "AI", and here was the Nexus 5 doing it in 2014. Google was at least three generations ahead of everyone else with the Nexus 5's HDR+ mode and only continued to iterate on this success with the later Nexus and Pixel devices. Google never quite ironed out the bugs with the Nexus 5's camera. The camera driver would often crash and the phone need to be restarted before any camera function would work. This continued to all versions, though Android 5 was probably the most stable in my experience. Nexus 5 was still plenty hardware capable of later Android versions than the 6.0.1 it officially topped out at, but Qualcomm refused to update the Snapdragon 800's "Board Support Package", which was essentially the hardware drivers for Android 7's updated requirements. There was no good reason, as the Snapdragon 801 and 805, which were the same hardware generation, did get the updates! Never ones to be dissuaded, the Android community backported the drivers from the Snapdragon 805 (which was largely identical), worked out how to repartition the onboard storage to give more space for /system (1.5 GB seemed optimal) and ported Android 9.0 to the device. 
Other tweaks were stereo audio, using the earpiece as a top speaker, since it seemed the earpiece speaker's audio amp was massively overspecified for its role (maybe LG intended this all along...), and backporting Nexus 5X camera features. Custom development for it seemed to peter out in 2018 and 2019, with Android 8.1 being most useful as a daily driver (Lineage OS 15.1) and some Android 9.0 builds which did work, but had issues, notably with Bluetooth. By this point, the Nexus 5 was actually out-performing similar builds on the Nexus 6, which used a Snapdragon 805. In 2018, however, Nexus 5 had truly run its lap and its 2 GB RAM was starting to be the limiting factor. The Nexus 5 was one of the greatest phones ever made, represented an inflection point in camera capability, and its wide success made Google think about maybe taking the Nexus mainstream - which it did, as the Pixel. Being mainstream, the Pixel series was more locked down, less hackable, less customisable, less "reference design", and more intended to be a gateway to the Google ecosystem. This was a direction Google had been moving with the Nexus anyway. Nexus 4 had been aimed at end users, rather than the "tech-savvy", for much of its life, and Nexus 5 was widely available in ways previous Nexus devices had not been. |
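The idea behind the HDR+ description in this entry, taking several frames and keeping the best-exposed parts of each, can be illustrated with a toy merge. This is emphatically not Google's algorithm: it is a simplified exposure-weighting sketch with no frame alignment, no noise model and nothing running on a DSP. The function names and the `mid` and `sigma` parameters are assumptions made up for the example, and pixel values are plain floats from 0 to 1.

```python
import math


def well_exposedness(value, mid=0.5, sigma=0.2):
    """Weight a pixel by how close it is to mid-grey (never zero)."""
    return math.exp(-((value - mid) ** 2) / (2 * sigma ** 2))


def merge_burst(frames):
    """Merge equally sized frames (lists of 0..1 values) pixel by pixel.

    Each output pixel is a weighted average of that pixel across the
    burst, so crushed shadows and blown highlights contribute little.
    """
    merged = []
    for samples in zip(*frames):
        weights = [well_exposedness(v) for v in samples]
        total = sum(weights)
        merged.append(sum(w * v for w, v in zip(weights, samples)) / total)
    return merged


dark_frame = [0.05, 0.45]    # shadow is crushed, highlight is fine
bright_frame = [0.55, 1.00]  # shadow is fine, highlight is blown
print(merge_burst([dark_frame, bright_frame]))
```

In the example, the first output pixel lands near the bright frame's value and the second near the dark frame's, which is the "patching over" behaviour described in the text in its crudest possible form.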

|||||||||||||||

|

Moto G5 Plus - 2017 The Moto G, in 2014, was something of a surprise success. It was "good enough" in every area. It had a good enough 1280x720 screen. 1 GB RAM and 8 GB storage was good enough. The camera was good enough. The Snapdragon 400 (4x 1.2 GHz A7s) was good enough. The price was only £100 in the UK, €140 in Europe. It sold extremely well. Motorola's story is an interesting one. Galvin Manufacturing Corporation was founded in 1928 in Illinois, and sold "battery eliminators", which allowed a radio to run from mains electricity. By 1930, battery eliminators were obsolete, and Galvin had his company focus on in-car radios. The product name was "Motorola": "-ola" was a popular ending for a corporate identity (Victrola, Crayola, Moviola), and "Motor" stood for the motorcar. Galvin offered the first in-car radio in 1930. By 1940, Motorola had established itself as the USA's premier radio brand, and Galvin Manufacturing Corporation changed its name to Motorola. Skipping a bit, Motorola got into semiconductors in the 1970s, developing the devices which powered the microcomputer revolution, the MC6800 and MC68000. Motorola in the 1980s invented the "Six Sigma" quality process (which focuses on eliminating variation). This defines what an opportunity for a defect is, and aims for 3.4 defects per million opportunities. This business process approach helped Motorola develop the first cellular telephone. By 1998, cellular telephony was two thirds of all Motorola's gross revenue, but Motorola had just been overtaken by Nokia as the world's biggest seller of cellular devices. Over the 2000s, Sony-Ericsson, Research in Motion, and even Apple Computer were to stride ahead of Motorola, which sold its cellular division to Google under the "Moto" brand. Google stripped it of what was useful and sold the rest to Lenovo. Moto's naming went a bit awry for a while, maintaining the "Moto G" name, as it had been a winner, with "2nd Gen" and "3rd Gen".
A Moto E came, which actually fit beneath it, and then variants on the G series, so the G4 Play, G5 Plus... Which is what we're here for today. The G5 Plus used a one piece PCB, very unusual in devices of that era, with a somewhat high component count: Just count all that shielding! The one piece PCB limited the space devotable to a battery, so Lenovo made the device a little deeper to fit in a 3,000 mAh battery. In 2017, the company I work for was giving out Moto G5s as company mobiles. Mostly because they were cheap: £110 got you one new. With a bit more cash to spend, like submitter Bryan McIntosh had, you could get the similarly designed G5 Plus, a mid-range device for around £220 (€240). Where the G5 was "good enough" (it was actually a little worse than the G4 in some areas), the G5 Plus was "pretty decent". It had eight 2.0 GHz A53s in its Snapdragon 625, pretty decent. It had options for 2/3/4 GB RAM and 32/64 GB storage. Bryan went for 2 GB and 32 GB, pretty decent. It had a simple 12 MP phase-detection rear camera, pretty decent. It had a 5.2" full-HD screen, pretty decent. To actually beat this, you had to go to a flagship or an upper-mid-range device. Bryan tells us: "This phone is my daily driver; the camera is about as good as my Lumia 830 was, and the software load is close to stock. It shipped with Android 7.1, and now has been upgraded by Lenovo to 8.1. Lenovo is incredibly slow with security updates; it's still stuck on the February 2019 security update, and it looks like Lineage OS stopped updating for this phone back in April of 2019. It's got 32 GB of storage and 2 GB of RAM, and works reasonably well as a solid all-around phone." At the same time, this author was sporting a Huawei P10, Huawei's 5"-class flagship, which wiped the floor with the G5 Plus in every way and was a touch more expensive (£260/€280), but almost completely unavailable in North America.
Huawei also uses a significantly customised Android, while Lenovo's Moto series typically keeps the customisations to a minimum. As someone with a Nexus 7, Nexus 4 and Nexus 5 in device history, clean Android certainly does have its own draw. To go off on a technical bent a little, the G5 Plus' Snapdragon 625 (MSM8953) sports eight A53 cores - it's almost identical to the Snapdragon 430 (MSM8937, in the G5), but clocks higher and is made on the lower power 14 nm process. Qualcomm doesn't make it very public, but the 625 appears to be a die shrink of the 430. The Adreno 506 GPU in the 625 only differs from the Adreno 505 in the 430 by running 200 MHz faster, at 650 MHz. |
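The Six Sigma target mentioned in Motorola's history above, 3.4 defects per million opportunities, is a simple ratio. A minimal sketch follows; the function name `dpmo` and the production figures in the example are invented purely to show the arithmetic.

```python
def dpmo(defects, units, opportunities_per_unit):
    """Defects per million opportunities."""
    return defects * 1_000_000 / (units * opportunities_per_unit)


# Hypothetical run: one million handsets, ten things that can go wrong
# on each, and 34 defects found in total.
print(dpmo(defects=34, units=1_000_000, opportunities_per_unit=10))  # 3.4
```

The denominator is opportunities rather than units, so a complex product with many possible failure points can carry more defects per unit and still meet the target.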

|||||||||||||||

|

Huawei P Smart POT-LX1 - 2018 The "P Smart" was Huawei's entry level of 2019, low cost and feature packed. Huawei used its own Kirin 710 (TSMC 12 nm, replaced the 16 nm Kirin 650) system-on-chip with four 2.2 GHz A73s and four 1.7 GHz A53s. Graphics was handled by four Mali G51 clusters and memory was 3 GB of LPDDR4. The screen was quite a high point for the P Smart, with a 1080x2340 6.2" display. The camera was a very basic 8 Mpx unit (a 16 Mpx version was available) without any focus assist or image stabilisation. There isn't really much else to say about this guy. The phone came in for a screen replacement (an 8 year old throwing tantrums and phones) and left intact. Instead, I'll talk about internal design. Behind the screen at the top you usually have the PCBs. These are typically stacked, and some designs have flexible PCBs (the Nexus 5 is a good example here) as daughterboards. The large silver areas are actually shields covering components to improve their RF characteristics. They're not so much to prevent interference (in either direction) as to allow high frequency components to remain within good impedance, and removing them can cause problems. They're usually linked by either grounding to the PCB or conductive copper tape so they all remain at the same ground reference. It's also become a bit of a standard that the bottom left of the PCB contains the screen and digitiser control. This drives the screen (usually using embedded DisplayPort, eDP), while the digitiser is the almost-invisible conductive overlay of the screen which tracks touch input. On modern phones, the digitiser is actually built into the screen itself, rather than being a separate component overlaid or bonded. This is why a "cracked screen" is usually just the protective glass cover and doesn't stop touch from working, as often used to be the case. | |||||||||||||||

|

Xiaomi Mi 8 - 2018 I'm sometimes asked why I don't often use photos of the exterior of these devices. My response is "Why do something everyone else who discusses them does?" as it's triflingly easy to search up what any device looks like on the outside. The Xiaomi Mi 8 was Xiaomi's first real attempt to break into the Western market. Earlier devices from Xiaomi had been quite well regarded and 2017's Mi 6 had been moderately popular. In China, the naming would be rendered "Xiao Mi 8", but such wordplay on the branding doesn't really work in the West. Xiaomi hired many "brand ambassadors" and lavishly spent on media "influencers", which were mainstream by 2018, to promote the brand in the West. Xiaomi had long been a contract manufacturer and had good working knowledge of how to make a good Android smartphone. Indeed, it had used its Poco and Redmi brands in the West for some time, typically emulating the design of Samsung or Apple. MIUI, Xiaomi's Android skin, is heavily Apple influenced, for example. Indeed, the Mi 8 seen here has a screen almost indistinguishable from the iPhone X, using the same weird (and downright ugly) screen-top notch, similar screen dimensions (it's a bit larger than the Apple) and a very, very similar camera arrangement. No, I need to better qualify that, "Almost identical camera arrangement". The iPhone X used two 12 MPx sensors, a wide 28 mm (equiv.) f/1.8 1.22µm main camera, and a telephoto 52 mm, f/2.4 1.0µm secondary camera. The Mi 8 used two 12 MPx sensors, a wide 28 mm (equiv.) f/1.8 1.4µm main camera, and a telephoto 56 mm, f/2.4, 1.0µm secondary camera. At this point "copying" is probably too weak a word! That said, Xiaomi's camera arrangement was superior. The main camera had larger sensor sites, so captured more light, and was capable of 960 FPS slow motion at 1280x720. It had four axis optical stabilisation and generally produced better results in more challenging conditions. 
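The "larger sensor sites" point can be put into rough numbers from the two pixel pitches quoted above. This is a back-of-envelope sketch only: it assumes square photosites and ignores lens, sensor generation and processing differences, and the function name is made up for the example.

```python
def photosite_area_ratio(pitch_a_um, pitch_b_um):
    """Ratio of light-collecting area, assuming square photosites."""
    return (pitch_a_um / pitch_b_um) ** 2


# Main cameras: Mi 8 at 1.4 um against iPhone X at 1.22 um.
ratio = photosite_area_ratio(1.4, 1.22)
print(f"{(ratio - 1) * 100:.0f}% more area per photosite")  # 32%
```

Because area scales with the square of the pitch, a seemingly small difference in microns turns into roughly a third more light-collecting area per pixel.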
In hardware, Xiaomi had also produced a stronger device than the iPhone X. Qualcomm's Snapdragon 845 was equivalent to Apple's A11 Bionic in CPU performance (and used slightly less power, but this was very close) and video performance was equally tight, with the Snapdragon usually taking the lead, as Qualcomm's Adreno GPU tends to do with any competitor. Finally, Xiaomi offered a 13.1 watt-hour battery next to Apple's 10.3 Wh. Specs tended to pass back and forth between them. Xiaomi's audio quality was a bit better, Apple's screen was a bit brighter, Xiaomi's front-facing camera was a lot better, Apple's environmental protection (IP67) was a lot better, the Xiaomi had a bigger screen, the Apple had faster storage, Xiaomi gave a fast fingerprint sensor, Apple gave a 3D touch system, Apple had Face ID, Xiaomi had infrared "see in the dark" Face ID, Xiaomi was 0.1 mm thinner... You could go on all day. The result was that a Chinese manufacturer and designer had walked up to Apple and punched it square in the face. Mi 8 wasn't a cut-rate knock off of the iPhone X (which did exist, in large numbers); it was competing not just in looks, but also in specifications, performance, features, and quality. This had long been Samsung's ground (though Samsung used its own design language) or Huawei's ground (and Huawei is its own, similar, story!), but never had either of them produced a device so similar to a current iPhone that its specs sat right alongside. Nobody, before Xiaomi at least, had that kind of confidence. Who would be stupid enough to put their device right alongside an iPhone? It was inviting defeat, ridicule. Yet, Xiaomi did exactly that and with enough swagger to pull it off. Ultimately, Xiaomi had produced a competitive flagship.
There was a more expensive "Mi 8 Pro" version, which was actually a Western ("Global", to Xiaomi) version of the Mi 8 Explorer Edition. It had a weirdly transparent back showing fake parts (cynically) or an artistic rendering of the stylised interior (generously) and changed the design to have an in-display fingerprint sensor instead of a rear-mounted unit. That was it. The memory and storage overlapped the regular Mi 8, the Pro wasn't available widely for very long, and other components were identical. The Mi 8 was also just under half the price of competing designs from Samsung, Huawei, and Apple. Reviewers typically applauded its display (a strong Super AMOLED design) and performance, but criticised the lack of an ingress protection (IP) rating. In my testing it performed similarly to an IP43 device, with protection against solid particles but not small dust (the speaker grilles) and able to withstand water splashes. Like all big battery phones, it ran the battery at an extreme level, for capacity, not endurance, and after around two years the battery had degraded to around two thirds of its designed capacity, and was replaced. Not a terribly difficult operation on the Mi 8, it has to be said. For most of its life it used slightly customised Xiaomi.eu firmware (a weird semi-official thing) before being moved to Pixel Experience a little later. Non-Xiaomi firmwares almost always disabled the telephoto camera and always disabled the infrared face-ID camera (which was an actual camera and could be used by suitable camera apps for near-IR!) but were good in other ways. Anyway, this was used as a daily driver right until March 2023, when it seemed the flash module in it failed. One night it just turned off and wouldn't turn on. Connecting it to a PC found it was in EDL (emergency download) mode, but it wasn't responsive in that mode for re-flashing firmware. Use of MiFlash (with an authorised account, and with de-authed Firehose ELFs) failed and use of QFIL similarly failed. 
| |||||||||||||||

|

Xiaomi Mi 10T Pro 5G - 2020 The "T" sub-brand of Xiaomi's flagship "Mi" line means it's a slightly cut back "flagship killer". It has all the top features, but cuts corners to reduce price. In the Mi 10T's case, this was the display, which was an IPS LCD instead of the high end OLED. The difference between the Mi 10T Pro and its "non-Pro" was a 108 Mpx camera vs a 64 Mpx camera. These obscene megapixel counts were useless in practice, as a full resolution shot was far too noisy. The Samsung-supplied sensor, however, did quad-pixel binning to clear noise. A regular, binned image is 27 megapixels and each pixel has four times the samples a basic Bayer pattern would predict, causing far less noise than would otherwise be expected. This is mostly undone by very poor zoom performance. You'd think a camera with access to such a massive sensor grid could do huge zooms, but a 30x zoom is a blurry, indistinct mess. A 5x zoom (roughly 50mm equivalent) is about the highest usable. DxOMark gives it a rating of 118. The three rear cameras were a main, large lens, 108 Mpx unit, a smaller 13 Mpx ultra-wide ("0.6x zoom") and a 5 Mpx macro lens, capable of focusing to around 10 mm. The front camera is a 20 Mpx unit with a tiny aperture useful only in very good lighting conditions. Xiaomi made much of the "liquid cooling chamber", which was a very thin copper heatpipe from the SoC area to the screen frame. When I replaced the screen on this, the replacement lacked the heatpipe (the Mi 10T lacked it, the Mi 10T Pro had it, but the screens were interchangeable) and no performance difference in any benchmark could be measured. The SoC in control here is Qualcomm's Snapdragon 865, fabricated on TSMC's N7P process with 10.3 billion features and a tiny 83.54 mm^2 area. It used a 64-bit memory bus (4x 16-bit channels) to LPDDR5 in this case. It had four ARM A77s (one of them a "Prime" core with 512 kB L2 cache, the other three with 256 kB L2 cache each), and four A55s, each with 128 kB L2 cache. 
The eight-core CPU cluster is served by a 4 MB L3 cache, and the entire unit has a 3 MB L4 cache tied to the memory controllers, which the GPU can also use. The Adreno 650 GPU peaks at 1.2 TFLOPS FP32 but cannot remotely sustain that. |
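The quad-pixel binning mentioned for the Mi 10T Pro's camera can be sketched in a few lines. This is a toy model and not Samsung's or Xiaomi's actual pipeline: it treats the sensor as a single-channel grid (a real quad-Bayer sensor bins same-colour groups), assumes independent Gaussian noise on every photosite, and the names `bin_2x2` and `noise_std` are invented for the example.

```python
import random
import statistics


def bin_2x2(frame):
    """Average each non-overlapping 2x2 block of a 2D list (even width and height assumed)."""
    rows, cols = len(frame), len(frame[0])
    return [
        [
            (frame[r][c] + frame[r][c + 1] + frame[r + 1][c] + frame[r + 1][c + 1]) / 4
            for c in range(0, cols, 2)
        ]
        for r in range(0, rows, 2)
    ]


def noise_std(frame):
    """Population standard deviation of every value in the frame."""
    return statistics.pstdev([value for row in frame for value in row])


random.seed(0)
SIGNAL, NOISE_SIGMA = 100.0, 10.0  # arbitrary flat grey scene with noise on top

# A small stand-in for the full-resolution readout.
full = [[random.gauss(SIGNAL, NOISE_SIGMA) for _ in range(200)] for _ in range(200)]
binned = bin_2x2(full)

pixel_ratio = (len(full) * len(full[0])) / (len(binned) * len(binned[0]))
noise_ratio = noise_std(full) / noise_std(binned)

print(f"pixel count reduced by {pixel_ratio:.0f}x (108 Mpx -> {108 / pixel_ratio:.0f} Mpx)")
print(f"noise reduced by about {noise_ratio:.2f}x")
```

Averaging four independent samples should cut random noise by about the square root of four, so the binned frame comes out at a quarter of the pixel count with roughly half the noise. That is the trade the 108 Mpx sensor makes to produce its 27 Mpx images.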

Hattix hardware images are licensed under a Creative Commons Attribution 2.0 UK: England & Wales License except where otherwise stated.
