Generations

- Pre-3D (up to 1995)
- Texture Mappers (1995-1999)
- Fixed T&L, Early Shaders (2000-2002)
- Shader Model 2.0/3.0 (2003-2007)
- Unified Shaders (2008+)

Feature set determines where a card will go, not its year of introduction.


S3 ViRGE DX 4MB - 1995 As the Sparkle SP325A (which used the ViRGE and ViRGE DX/VX/GX interchangeably), this card is one of the famous "3D Decelerators", which actually made 3D slower than plain software rendering. The ViRGE was pin-compatible with the S3 Trio64V+ (and used the same video core), so it was quick to market since it could use existing card designs. On paper, the ViRGE was a powerful part, able to texture a pixel in 2 cycles and running at 80MHz (40Mtx fill rate), but it was limited to reading one byte from memory per clock, so it could only read half a texture sample per clock (ironically, very similar constraints and performance figures to the PlayStation). However, the hardware 3D of the time was expected to give better quality than software rendering, so bilinear filtering and z-fogging were expected. As ViRGE was a two-cycle renderer, performance fell off steeply as more texture samples were added. A single point-sampled, unmipmapped pixel (e.g. GL_NEAREST) took two cycles, but a bilinear filtered pixel (e.g. GL_LINEAR_MIPMAP_NEAREST) took eight cycles (as opposed to one on the Voodoo Graphics). Trilinear filtering (e.g. GL_LINEAR_MIPMAP_LINEAR) could not be performed, but if it could, it would have taken sixteen cycles. ViRGE support was added to some games, most notably Descent 2 and Tomb Raider, but S3's lack of OpenGL support (it could not run GLQuake) and its neglect of Direct3D in favour of its own "S3D" API (as S3 would later try with "MeTaL" on the Savage series) condemned it to a quick death. The ViRGE GX2 was made electrically compatible with 66MHz PCI and AGP, though it did not use any AGP abilities. Later, S3 quietly hid the ViRGE embarrassment: their Trio3D cards (which were renamed ViRGE GX2 cores, some of them actually printed with ViRGE GX2 and covered with a sticker on the chip) did not use the name at all.
Due to one-cycle EDO RAM timing, ViRGE's memory performance was excellent, and it was based on the industry-leading Trio64V+ GDI accelerator, making it by far the most powerful 2D accelerator available at the time; it led the field until the Voodoo Banshee arrived. Core: 1 pipeline, 0.5 TMU (one texture sample takes two cycles, 40 million pixels per second) RAM: 64 bit, asynchronous EDO (40ns/63MHz), ~264MB/s Shader: Disastrous triangle setup engine was slower than MMX-assisted software. Drivers: Included in Windows XP, 2000, ME, 98, 95. As S3 325/Trio64 in most Linux distributions.
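The ViRGE's filtering penalty can be sketched as a tiny cost model. This is a minimal sketch, assuming every texture sample costs the same fixed number of cycles (two on the ViRGE, per the text) and that a pixel needs 1, 4 or 8 samples for point, bilinear and trilinear filtering; the mode names are just the OpenGL labels used above, and `SAMPLES_PER_PIXEL`, `cycles_per_pixel` and `pixels_per_second` are hypothetical helpers, not any driver's API.

```python
# Texture samples needed per pixel for each filtering mode named in the text.
SAMPLES_PER_PIXEL = {
    "GL_NEAREST": 1,                # point sampling
    "GL_LINEAR_MIPMAP_NEAREST": 4,  # bilinear: 2x2 texels from one mip level
    "GL_LINEAR_MIPMAP_LINEAR": 8,   # trilinear: 2x2 texels from two mip levels
}


def cycles_per_pixel(cycles_per_sample: int, mode: str) -> int:
    """Cycles to texture one pixel, assuming samples are fetched one after another."""
    return SAMPLES_PER_PIXEL[mode] * cycles_per_sample


def pixels_per_second(clock_hz: float, cycles_per_sample: int, mode: str) -> float:
    """Peak textured pixels per second for a single-pipeline part."""
    return clock_hz / cycles_per_pixel(cycles_per_sample, mode)


if __name__ == "__main__":
    # ViRGE figures from the text: 80 MHz, two cycles per texture sample.
    for mode in SAMPLES_PER_PIXEL:
        rate = pixels_per_second(80e6, 2, mode)
        print(f"{mode}: {cycles_per_pixel(2, mode)} cycles, {rate / 1e6:g} Mpixels/s")
```

At 80 MHz this gives 40, 10 and 5 million pixels per second for point, bilinear and (hypothetical) trilinear sampling, which is why turning on filtering crippled the chip.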


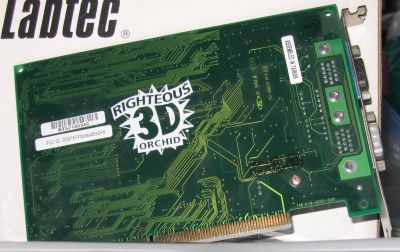

Orchid Righteous 3D Voodoo Graphics 4 MB - 1996 1996's Voodoo Graphics took the world by storm. 3Dfx (before their rebranding to "3dfx") had positioned the SST1 product for arcade machines, and the clearly next-generation visuals of games like San Francisco Rush (on the Atari Flagstaff arcade system board) gained a lot of media attention. It wasn't until 1996/7 that it became feasible in the consumer market, due to DRAM manufacturers hugely overestimating the memory needed for Windows 95 and causing a huge drop in DRAM prices. The price of the SST1's 4 MB of memory dropped to a quarter in just a few weeks, and the consumer Voodoo Graphics PCI was born. Before SST1, most 3D had been largely untextured; the problems being solved were geometry-based. 3Dfx knew that a host CPU was fast enough to handle geometry in software so long as the hardware could handle simple triangle setup, and that the real problem was texture mapping, something which required an awful lot of memory bandwidth and a huge array of parallel multipliers: a texture mapping unit. Where SST1 succeeded was in its performance. ViRGE and many of Voodoo's competitors could not perform texturing and fogging in a single pass (indeed, many took multiple passes for just the texture), but Voodoo could render a single textured, fogged surface in a single pass. SST1 was a real chipset, spread across two chips. In our image, the upper chip is the texture mapping unit (TMU) and the lower is the Frame Buffer Interface (FBI). It gained fame not because of its awesome performance (there were others) but because of how widespread it became. 3Dfx implemented the Glide API: not a huge hardware-agnostic tour-de-force like Direct3D or OpenGL, but a small, simple API which implemented what the SST1 could do and nothing of what it couldn't. Glide handled the silly low-level things like initialising and programming the Voodoo Graphics card and its SST1 chipset, and let game designers get on with designing games.
Voodoo Graphics remained one of many until 3Dfx scored a huge coup. Working with id Software, 3Dfx released a "minigl" driver for the Voodoo Graphics, which implemented the parts of OpenGL that "GLQuake" used. The result was Quake in 640x480, fully texture mapped and running at a smooth, fluid framerate. After that, Voodoo became a mark of quality on any game which supported it. Submitter Calum from the Ars AV forum bought it for £200 (GBP), possibly in early 1997. Many games were playable at the time, but for most people it was Tomb Raider and the excellent MechWarrior 2. This early card controlled the switching between external VGA (from a video card) and the Voodoo's 3D with a pair of relays near the back of the card (the Omron components), so entering 3D mode resulted in an audible click as the switches fired. Voodoo was capable of standard 2D output, as it had a VESA VBE 2.0 linear frame buffer interface, but it had no acceleration of this whatsoever, so performance would have been abysmal. Core: 1 pipeline, 1 TMU, 50MHz (50 million texels per second) RAM: 64 bit (x2) asynchronous EDO DRAM, 400 MB/s peak Shader: None


Matrox Mystique 220 4MB - 1996 The infamous "Matrox Mystake" was Matrox's first integrated 3D card. This one is likely the 4MB variant; it could be upgraded to 8MB by adding a RAM card to the connectors marked J1 and J5, which were standard across all Mystique cards. Predictably, the "Memory Upgrade Module" sold extremely poorly. This one, as you can see, uses the MGA1164SG, making it the '220' variant, which was released in 1997 to match the 220MHz RAMDAC speed of the Millennium. The original Mystique had a 170MHz RAMDAC, which forced customers using UXGA 1600x1200 screens to keep aspirin handy at a 60Hz refresh rate and 256 colours; this alienated more than one Mystique user, given Matrox's typical userbase. Matrox pitched this card at gamers who, quite rightly, laughed Matrox out of town. The Mystique can do mipmapping, but cannot filter the textures, which defeats most of the point of mipmapping. Visual quality on the Mystique was pretty dire. Like the ATI Rage Pro (which could filter textures), it also could not perform alpha blending, instead using texture stippling to approximate it. Because the Mystique did no texture filtering, it used only a quarter of the texture memory bandwidth of a card using bilinear filtering, so it handily out-performed the Rage Pro and ViRGE (but what didn't beat the ViRGE?). With software disabling texture filtering on the Rage Pro, ATI's card took a substantial lead over the Mystique, which just goes to show that tests must be performed on even ground. The Mystique also couldn't do W or Z based fogging and, as it could not perform per-pixel lighting, it was unable to do perspective-correct specular, alpha or fog. In its defence, very few cards of that era could solve the lighting equation for each pixel; instead they did it on a per-triangle or per-vertex basis and interpolated outwards, or not at all. 3DFX's Voodoo Graphics wiped the floor with it, with or without bilinear filtering.
Most Mystique owners, like their ViRGE brethren, added a 3DFX Voodoo or Voodoo2. The Mystique introduced the 2D GDI accelerator and memory interface which would later be used in the G100, G200, G400 and G5x0, only to be replaced in Parhelia. This 2D accelerator blew the very highly rated Trio64 and ViRGE clean out of the water, and ATI's Mach64 (used in all ATI Rage series cards) was even worse. Mystique's 2D accelerator would not be beaten until the ridiculously fast 2D of Voodoo Banshee and Voodoo3 arrived; this is excusable, as a Voodoo3 was faster in 2D applications than both a GeForce4 Ti and a Radeon 9700. Mystique's 2D performance was, however, eclipsed by the contemporary Millennium, which was much more expensive and used faster memory to achieve its performance. Mystique's SGRAM particularly aided the load patterns encountered with texture mapping, less so the patterns associated with GUI operations, which are mostly block moves and BLITs. The second display output here eluded identification for a long while: it's a 15 pin male D connector which isn't a VESA standard. It turns out this connector is not connected to anything until a Rainbow Runner card is added, at which point the following pinout takes over:

- 3+4 - S-video out
- 6+7 - S-video in
- 8 - Composite in
- 5 - Composite out
- 10-15 - Chassis ground

Thanks to David Young for providing the part. Core: 1 pipeline, 1 TMU, 66MHz (66 million texels per second) RAM: 64 bit SDRAM, 100MHz, 640MB/s Shader: None
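To see why skipping texture filtering saved so much bandwidth, the two sampling methods can be compared directly. This is a minimal sketch, assuming a greyscale texture stored as a list of rows, clamp-to-edge addressing and texel centres at half-integer coordinates (common conventions, not anything specific to the Mystique or Rage Pro); point sampling reads one texel per pixel while bilinear filtering reads four and blends them, which is where the quarter-bandwidth figure comes from. The function names are hypothetical.

```python
import math
from typing import List

Texture = List[List[float]]  # rows of greyscale texel values


def texel(tex: Texture, x: int, y: int) -> float:
    """Fetch one texel with clamp-to-edge addressing."""
    h, w = len(tex), len(tex[0])
    return tex[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]


def sample_point(tex: Texture, u: float, v: float) -> float:
    """Point sampling (no filtering): one texel read per pixel."""
    h, w = len(tex), len(tex[0])
    return texel(tex, int(u * w), int(v * h))


def sample_bilinear(tex: Texture, u: float, v: float) -> float:
    """Bilinear filtering: four texel reads, blended by the fractional position."""
    h, w = len(tex), len(tex[0])
    fx, fy = u * w - 0.5, v * h - 0.5  # texel centres sit at half-integer coordinates
    x0, y0 = math.floor(fx), math.floor(fy)
    ax, ay = fx - x0, fy - y0
    top = texel(tex, x0, y0) * (1 - ax) + texel(tex, x0 + 1, y0) * ax
    bottom = texel(tex, x0, y0 + 1) * (1 - ax) + texel(tex, x0 + 1, y0 + 1) * ax
    return top * (1 - ay) + bottom * ay


if __name__ == "__main__":
    tex = [[0.0, 100.0], [100.0, 200.0]]
    print(sample_point(tex, 0.5, 0.5))     # snaps to a single texel
    print(sample_bilinear(tex, 0.5, 0.5))  # blends all four texels
```

Point sampling is cheap but produces the blocky, shimmering textures the Mystique was known for; the four reads per pixel in `sample_bilinear` are the cost the Mystique avoided.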


SiS 6326 4 MB - 1997

This was in the workshop very briefly around 1999. A greybeard tech's eyes weren't what they used to be, and he needed me to solder the VGA port back down. It passed testing, but was the slowest 3D accelerator I'd ever actually seen. It did roughly DirectX 5. Memory on the card could have been FPM, EDO, or SDRAM, which would give it bandwidth anywhere from 360 MB/s (45 MHz FPM) to 1,060 MB/s (133 MHz SDRAM). FPM supported effective cycle times of 45-80 MHz, EDO 45-90 MHz, and SDRAM 66-133 MHz. As a very low-end 3D accelerator around 1999, this would be seen with 66-100 MHz SDRAM. It ran the video clock at 40 MHz and had one pixel pipeline with one texture mapping unit. Simple texture operations could be single-cycled, so basic shaded games ran relatively well. SiS included a DVD decoder in hardware and had a TV output encoder on the chip. SiS released a single beta OpenGL ICD, which implemented most of OpenGL 1.1. This allowed it to run Quake 2, if poorly. The aim of this chip was pure flexibility. It could use any available memory type, in configurations from 2 to 8 MB, and could run on both the PCI and AGP buses. With 8 MB and 133 MHz SDRAM, it actually wasn't all that bad and could hit around 70% of an ATI Rage Pro (which ran a much faster clock). With EDO RAM, it would struggle to reach half of a Rage Pro's already somewhat mediocre performance. Core: 1 pipeline, 1 TMU, 40 MHz (40 million texels per second) RAM: 64 bit SDRAM, 100MHz, 640MB/s (typically) Shader: None
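The bandwidth range quoted above follows from bus width and memory clock. This is a minimal sketch, assuming the simple peak formula (bus width in bytes multiplied by the effective memory clock, one transfer per clock) and ignoring refresh, page misses and other real-world overheads; `peak_bandwidth_mb_s` is a hypothetical helper.

```python
def peak_bandwidth_mb_s(bus_width_bits: int, clock_mhz: float, buses: int = 1) -> float:
    """Peak bandwidth in MB/s: bytes per transfer x million transfers per second x buses.

    Assumes one transfer per clock (single data rate) and no overheads.
    """
    return bus_width_bits / 8 * clock_mhz * buses


if __name__ == "__main__":
    # SiS 6326 extremes from the text: 64 bit bus, 45 MHz FPM up to 133 MHz SDRAM.
    print(peak_bandwidth_mb_s(64, 45))   # 360.0
    print(peak_bandwidth_mb_s(64, 133))  # 1064.0, quoted above as roughly 1,060 MB/s
```

The same formula reproduces several other spec lines on this page: three 64 bit buses at 90 MHz give the Voodoo2's 2,160 MB/s, and a 128 bit bus at 100 MHz gives the Riva TNT's 1,600 MB/s.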


Diamond Stealth II S220 4 MB - 1997

Rendition were quite a big name in the early days of 3D video cards, at least as big as anyone who wasn't 3DFX could be, but the Verite V2100 saw little demand to begin with. Clocked at 45 MHz (a BIOS update later removed the V2100 from the market by clocking it up to V2200 levels of 55 MHz!), it was a single-cycle part able to keep up with and slightly exceed the Voodoo Graphics. It gets better. For just $50, it also offered a degree of video acceleration and, the GPU actually being a fully programmable RISC core, it was planned to be integrated with a Fujitsu FXG-1 geometry processor to give it a transformation and lighting engine. That was planned for 1998, a year before Nvidia would release GeForce. Not only that, but at least one V2x00 card was released with both AGP and PCI connectors, one on each edge of the card. Core: 1 pipeline, 1 TMU, 55MHz (55 million texels per second) RAM: 64 bit SGRAM, ~66MHz, ~528 MB/s Shader: None Thanks to Ars AV forum member SubPar


ATI Xpert@Play 98 - 1998

The Rage Pro was the successor to ATI's original venture into integrated 2D/3D acceleration, the Mach64-GT (later renamed 3D Rage). ATI's cards were often sold in the retail channel as "Xpert@Work" or "Xpert@Play", the latter featuring ATI's ImpacTV chip to provide TV-out via composite or S-video. In 1999 and 2000, these were still in retail channels as ATI cleared inventory; they were most popular during 1998, having actually been released in mid-1997. Many were the older 4 MB variant with a SODIMM slot, so ATI simply added a cheap 4 MB SODIMM to be able to sell them as the 8 MB card. When opening a retail box, one could never be sure exactly which card would be inside: two versions of the 8 MB card existed as well as this SODIMM-equipped card. This one was provided by Bryan McIntosh, but I had one myself way back then. It was quite the adventure. ATI's drivers were, in a word, atrocious. The Rage Pro chip ran very hot (but didn't require a heatsink) and had a somewhat limited feature set. It was incapable of alpha blending (specifically, it could alpha add, but not alpha modulate), instead using a horrible stipple effect, and wasn't perspective correct at texture mapping, though it claimed both as features! On paper, the Rage Pro was slightly better than a Voodoo Graphics and a little worse than the Nvidia Riva 128. In practice, it was worse than both. Part of the fault was the Rage Pro hardware itself: many operations took more cycles on the Rage Pro than they did on a Voodoo, but all the common operations (simple bilinear texture mapping) were single cycle, so this shouldn't have hurt performance much. The real problem, as ATI were about to make into a characteristic of all their products, was poor drivers. Rage Pro was the first to support partial DVD decoding, as it could offload motion compensation. This is exposed in some driver revisions via DXVA.
Derivatives of the Rage Pro, typically the Rage XL (a direct die shrink), continued to be sold until 2006, often finding their way onto server motherboards with a few old SDRAM chips next to them to give 8 MB. ATI later replaced this with the ES1000, a weird thing by itself. It was based on the ATI RV100 chip, a cost-reduced chip which hacked off half the render back end, removed the DX7 transformation and lighting engine entirely, and was sold as the Radeon VE or Radeon 7000. The world of the late 1990s was a very busy place in video cards. One could get offerings from S3, 3DFX, SiS (such as the SiS 6325), Rendition, Nvidia, Intel and ATI at prices ranging from £25 to £200. ATI, at this time, was yet to find its feet, and Rage Pro did not make many inroads among people wanting PC games. Core: 1 pipeline, 1 TMU, 83MHz (83 million texels per second) RAM: 64 bit 100 MHz SDRAM, 800 MB/s peak Shader: None
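The difference between true alpha blending and the stipple fallback used by the Rage Pro (and the Mystique) can be sketched per pixel. This is a minimal sketch, assuming 8-bit greyscale values and textbook blend equations: "modulate" is taken as the standard `src * alpha + dst * (1 - alpha)` blend and "add" as `src * alpha + dst` clamped to 255. The exact combiner modes of the Rage Pro are not described here, the 50% checkerboard is a simplification of screen-door transparency, and all function names are hypothetical.

```python
def blend_modulate(src: int, dst: int, alpha: float) -> int:
    """Classic alpha blend: src * alpha + dst * (1 - alpha)."""
    return round(src * alpha + dst * (1 - alpha))


def blend_add(src: int, dst: int, alpha: float) -> int:
    """Additive blend: src * alpha added on top of dst, clamped to 8 bits."""
    return min(255, round(src * alpha + dst))


def stipple_50(src: int, dst: int, x: int, y: int) -> int:
    """Screen-door stand-in for 50% alpha: draw src on a checkerboard, keep dst elsewhere."""
    return src if (x + y) % 2 == 0 else dst


if __name__ == "__main__":
    src, dst = 200, 100
    print(blend_modulate(src, dst, 0.5))  # 150 on every pixel
    print(blend_add(src, dst, 0.5))       # 200: brighter, not a see-through blend
    print([[stipple_50(src, dst, x, y) for x in range(4)] for y in range(2)])
```

Averaged over a 2x2 area the stipple also comes to 150, which is why it can pass for transparency from a distance, but up close it shows as the visible dot pattern described above.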


Diamond Monster 3D II 12 MB (Voodoo2) - 1998 The Voodoo2 used an array of custom ASICs to do one thing and do it extremely well: it was a texture filtering and mapping system. The texture mappers, which handled bilinear filtering and texture mapping, are the chips marked "500-0010-01" and exist as a pair. The whole card was only one pixel pipeline, the two TMUs being arranged one after the other, giving a 1x2 architecture. This meant that the full force of the Voodoo2 was only felt in games using two (or more) textures on the same surface. The original Voodoo also supported this mode, but was never seen on the PC in that configuration; arcade system boards like Atari's Flagstaff did use this mode, with a pair of Voodoo TMUs to one FBI. Quake II used the second texture layer for light maps, and Unreal used it for detail texture and macrotexturing. Both games ran far more quickly on Voodoo2 than they did on the competition. The card's 12 MB of memory can easily be seen here to be separated into three chunks of four DRAM chips each. The upper two segments, by the TMUs, are texture memory for the TMUs. The other block, at the bottom right of this image, is for the control engine. The memory was dedicated to each chip, so this Voodoo2 could only fit 4 MB of textures at any one time; some high-end cards had 6 MB per TMU. The control engine itself, the chip labelled "500-0009-01", is the Frame Buffer Interface (FBI): it assembled the frame in the framebuffer (which was in the control engine's memory) and handled the PCI bus interface. The third row of printing on the chips shows the manufacture date. The TMUs are "9814" and the control engine is "9815", referring to weeks 14 and 15 of 1998. Voodoo2 ran its pixel pipeline at 90 MHz and could texture a pixel with two bilinear filtered textures in a single cycle. Voodoo2's performance was so great that when Intel released the i740, the marketing fluff was very proud that the i740 was "up to 80% of the performance of a Voodoo2".
This was, of course, single texturing; in multitexturing, an i740 managed under a third of the performance of a Voodoo2. The weakness of the Voodoo2 was that it had absolutely no 2D engine. It could not be used as a "video card"; it was exclusively a 3D renderer. This is why it has two VGA ports on the back: one was an input from the existing video card, the other an output. The Voodoo2 would take over when a game was started, which meant that games could not be played in windowed mode, only fullscreen. As Voodoo2 ran at almost twice the clock of Voodoo Graphics and had double the per-clock rendering power, it was roughly 3.6 times faster than Voodoo Graphics, already the market leader. The connector at the top left was Voodoo2's trump card. Already the fastest video card for miles around, it could almost double its performance by collaborating with a second Voodoo2! In SLI (Scan-Line Interleave) mode, the two Voodoos would take turns drawing each line of the resulting frame, meaning that each had only half the work to do and together they were twice as fast. A pair of Voodoo2s in SLI was not beaten by any great degree until GeForce arrived. Even the mighty TNT2 Ultra was only about 30% faster. 3DFX's SLI was one of its massive advantages and to this day remains the most effective multi-GPU solution ever devised. At the time, games would see a 40% to 80% improvement. Normally, a plot of resolution vs framerate would flatten off at higher resolutions as the system became video limited, and flatten off at lower resolutions as it became CPU limited. Voodoo2 SLI was so fast that it never scaled up or down very much at all at different resolutions; it was entirely CPU limited in almost every game. Only when CPUs became much faster and the Voodoo2 SLI still kept up was it realised that the actual improvement from the second card was around 95% to 100%, and that it had been held back because the fastest available CPU (a Pentium II 450) was not fast enough.
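The 1x2 versus 2x1 trade-off, and the Voodoo2's speed-up over Voodoo Graphics, can be checked with a simple peak fill-rate model. This is a minimal sketch, assuming every TMU applies one bilinear texture per clock and that a surface needing more texture layers than a pipeline has TMUs takes extra passes, ignoring memory bandwidth and any pipeline-combining tricks; the clocks and layouts come from the spec lines on this page, and the `Card` class is hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Card:
    name: str
    pipelines: int
    tmus_per_pipeline: int
    clock_mhz: float

    def texel_rate(self) -> float:
        """Peak filtered texels per second, in millions."""
        return self.pipelines * self.tmus_per_pipeline * self.clock_mhz

    def pixel_rate(self, layers: int) -> float:
        """Peak pixels per second (millions) for surfaces with `layers` textures.

        Assumes each pass through a pipeline applies at most `tmus_per_pipeline` layers.
        """
        passes = math.ceil(layers / self.tmus_per_pipeline)
        return self.pipelines * self.clock_mhz / passes


VOODOO_GRAPHICS = Card("Voodoo Graphics", 1, 1, 50)
VOODOO2 = Card("Voodoo2", 1, 2, 90)
RIVA_TNT = Card("Riva TNT", 2, 1, 90)

if __name__ == "__main__":
    for card in (VOODOO_GRAPHICS, VOODOO2, RIVA_TNT):
        print(card.name, card.texel_rate(), card.pixel_rate(1), card.pixel_rate(2))
    # Dual-textured speed-up of Voodoo2 over Voodoo Graphics: 90 / 25
    print(VOODOO2.pixel_rate(2) / VOODOO_GRAPHICS.pixel_rate(2))
```

Under this model the Voodoo2 and the TNT share the same 180 million texels per second, but the 1x2 Voodoo2 only reaches it with two texture layers, while the 2x1 TNT reaches it on single-textured surfaces, so a 2x1 layout tends to do better in games that mostly use one texture.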
SLI was also immune to the microstuttering which alternate-frame rendering produces. Each frame is different, so some render faster than others. This causes Nvidia SLI or ATI CrossFire cards running alternate-frame rendering to vary the framerate massively from one frame to the next, a phenomenon known as microstuttering. As both GPUs worked on the same frame in 3DFX SLI, rendering was perfectly smooth throughout. This meant that Voodoo2, in SLI mode, was over seven times faster than its immediate predecessor. In a world where per-generation improvements of 10-20% are the norm, a sevenfold jump was just absurd. Core: 1 pipeline, 2 TMUs per pipeline, 90 MHz (180 million texels per second) RAM: 64 bit SDRAM (three buses), 90 MHz, 2,160 MB/s Shader: None Supplied by Ars AV forum member supernova imposter
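Scan-Line Interleave splits each frame by line rather than by frame. This is a minimal sketch, assuming line y is drawn by card y mod n and that the output stage simply picks each line from whichever card drew it; the synchronisation the real Voodoo2 pair performed over the SLI cable is not modelled, and both helper names are hypothetical.

```python
from typing import List


def lines_for_card(height: int, card: int, num_cards: int = 2) -> List[int]:
    """Scan lines a given card renders: every num_cards-th line, starting at its index."""
    return list(range(card, height, num_cards))


def merge_scanlines(partials: List[List[str]], height: int) -> List[str]:
    """Rebuild a frame: line y was drawn by card y % n and is that card's (y // n)-th line."""
    n = len(partials)
    return [partials[y % n][y // n] for y in range(height)]


if __name__ == "__main__":
    height = 6
    partials = [[f"line {y} (card {card})" for y in lines_for_card(height, card)]
                for card in range(2)]
    for line in merge_scanlines(partials, height):
        print(line)
```

Because both cards contribute to every frame, frames arrive at a steady pace, unlike alternate-frame rendering, where whole frames alternate between GPUs and can finish at uneven intervals.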


Matrox Mystique G200 8MB - 1998 Matrox knew which way the wind was blowing and saw the joke that the original Mystique had become. Third time lucky, perhaps? G200 was released in 1998 with great fanfare. This time, it'd support popular APIs like Direct3D and OpenGL; it had full AGP support, a pair of fast 64 bit internal buses (one in, one out) and, on the Millennium version, dual-ported SGRAM. The Millennium and Mystique both used the exact same PCB, though the Mystique was clocked a little lower, 84 MHz for the core and 112 MHz for the memory, and used normal SDRAM. I am not aware of any way to tell them apart other than looking up the part numbers of the RAM used, in this case 8ns (120 MHz at CL3, 100 MHz at CL2) plain SDRAM. It was fast, power hungry (the first video card to need its own heatsink; if you look below the barcode, it even has provision for fan power!), very advanced with trilinear filtering and 32 bit rendering... and a complete disaster. OpenGL was extremely important and the G200 simply didn't do it. Quake II wouldn't run on it, a deal breaker for many. Matrox released a quirky OpenGL-to-Direct3D wrapper which was dog slow, then released a minigl driver which only worked for Quake II. Even the Direct3D support was lacking on release: textures would pop in and out, and mipmaps would flicker. Later drivers cured much of the problem, but it wasn't until a year after G400's release that proper OpenGL support arrived on the G200. The G200 would only be notable for its high performance Windows accelerator, but it would soon lose even that crown to the Voodoo Banshee. Matrox were expecting the highly advanced and very highly performing (on paper) G200 to sell much more than it did, so provided numerous expansion connectors on the card. The largest is for an additional 8MB of VRAM (which appears to be a SODIMM slot!), but the others could be used to add Matrox products like the Rainbow Runner motion-JPEG processing card.
The highly performing (on paper) G200 turned out to be not quite so highly performing (on release) when pitted against a Voodoo2. It managed as much as 80% of the Voodoo2's performance in less intensive games, but often under 20% of the Voodoo2 in Quake II, where Quake II's multitexturing and OpenGL did not favour the G200's single texture mapper and API translation layer at all. Unreal, a native Direct3D game, was faster than Quake II, but the multitexturing Voodoo2 still put itself far beyond the G200, the Matrox card managing at best just over half the Voodoo2's performance. In games, then, the G200 was a very expensive Riva 128 or Rage Pro. This, along with products such as the S3 Savage 2000, the ATI Rage Fury and the ATI Radeon 8500, proves once and for all that driver development cannot be done as an afterthought. Core: 1 pipeline, 1 TMU, 84MHz (84 million texels per second) RAM: 64 bit SDRAM, 112MHz, 896MB/s Shader: None Supplied by Ars AV forum member supernova imposter


Matrox Millennium G200 8MB - 1998 The Millennium version of the G200 was marginally faster and quite a lot more expensive. Here's some data to chew on:

The Mystique sold quite a lot better (which isn't saying much really) than its negligibly overclocked brother. The SGRAM didn't help the Millennium much: very few operations need to read and write at the same time, which is SGRAM's only real advantage. The G200's most notable variant was the impossibly high-priced G200 MMS ($1,799!), which fitted four G200s on the same card to drive four DVI displays via two dual-link DVI connectors and two dual-link to single-link adapter cables. This crazy arrangement (which worked quite well with Matrox PowerDesk) remained the only quad-display capable single video card until well into the 2010s. Alas, this is not the MMS, it is the Millennium. By mid-1999, Matrox had dropped the Mystique entirely and reduced the price of the Millennium to match. Core: 1 pipeline, 1 TMU, 90MHz (90 million texels per second) RAM: 64 bit SDRAM, 120MHz, 960MB/s Shader: None Supplied by Ars AV forum member supernova imposter


Protec AG240D 8MB (Intel i740) - 1998 Intel's i740 was one of the most hotly anticipated video products of 1997 and 1998. Intel was coming to wipe out incumbents and upstarts alike with its semiconductoring might. The Intel behemoth would leave only ruins in its wake. The i740 was a strange beast. It was developed using technology from Lockheed Martin's Real3D division, which Intel went on to buy. It did not, however, work much like any other 3D accelerator. Firstly, it was the first AGP video card, but it did AGP in a very strange way. AGP has a feature called DiME (Direct Memory Execution), where a video card can read textures directly from system RAM. i740 had more rendering hardware available to it than any competing video card except Voodoo2 and could single-cycle more operations than any other video card, so its performance was widely expected to be extreme. Intel released it with considerable fanfare in 1998, with press expectations that everyone else would be killed off by the presence of the Intel juggernaut. At least until performance reviews arrived. i740 never kept textures in local RAM, instead using local RAM entirely as a framebuffer and Z-buffer. It streamed textures over the AGP bus using DiME, so in games, which were already known to be bandwidth intensive, it was contending for system RAM with the CPU. Removing one's AGP chipset drivers and forcing the card into PCI mode (where DiME is unavailable) actually increased the performance of the i740! A refresh, i752, was released slightly afterwards, but Intel exited the discrete video business almost immediately. i754 was a further improvement; it was integrated into the Intel i815 chipset (i752 was in the i810 chipset) and provided Intel's onboard video right up until the late Pentium 4 era, four years later.
A big architectural shakeup was cancelled in 2000: Capitola, a GPU to be launched with the Timna processor. Timna was to be a 2001/02 Pentium III with the chipset built in, meaning a memory controller and an i754-based IGP. It was to use RDRAM natively, but as RDRAM turned out to be primarily Rambus defrauding its shareholders, Timna was cancelled. Timna, designed at Intel Haifa, Israel, helped the later Banias project (Pentium M), which was somewhat more successful. i752 added 16-tap anisotropic filtering (never enabled in the drivers), MPEG2 motion compensation (two years after ATI), texture compression (never enabled in the drivers) and embossed bump mapping (never enabled...), but kept the stupid DiME-only texture mapping. Intel was to continue this technology in the really, really weird AGP Inline Memory Module, which was a small 4 MB memory module with an AGP connection! It provided dedicated memory to the Intel 815 chipset's VGA core (which was based on the i752) to improve its performance. Unfortunately, Intel priced it well over what it should have been, and it cost almost the same as just sticking a TNT2 M64 in the AGP port, which was much faster in every way. It couldn't store performance-critical texture data (because of the i740 heritage) but could store Z-buffer data. It was not a great performance uplift. The 40 pin female connector just above the 26 pin VESA feature connector is unknown, possibly some extension to the VESA standard. Also on this card is a Winbond EEPROM which holds the VGA BIOS, and a 66.6667 MHz oscillator. Astute readers may ask why an AGP card needs its own 66 MHz clock when pin 7 on side B of the AGP port already carries one. This is because AGP does not guarantee that the clock is 66 MHz, only that it should never be much above it. Like PCI, it doesn't specify a minimum clock, only a maximum. Some early motherboards ran the AGP port at 2x PCI clock, and could run PCI at 25 MHz.
For example, Super 7 boards running a 75 MHz FSB would usually run PCI at either FSB/2 (37.5 MHz, out of spec) or FSB/3 (25 MHz, within spec). If these boards, such as those based on the ALI Aladdin V chipset, then clocked AGP at PCI x2, the potential AGP clock could be 75 MHz, well out of spec. This is why 75 MHz bus speeds were rare: it was far easier to cleanly clock the rest of the system from a 66 MHz or an 83.3 MHz clock. Discrete i740s weren't made in large numbers and were quite rare, making this one of our rarer video cards. Core: 1 pipeline, 1 TMU, 66MHz (66 million texels per second) RAM: 64 bit SDRAM, 100MHz, 640MB/s Shader: None
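The bus clock arithmetic for these boards can be checked with a few lines. This is a minimal sketch, assuming PCI is derived from the FSB by an integer divider and AGP runs at twice the PCI clock, as on the boards described above; the 33.3 MHz and 66.7 MHz limits are the nominal PCI and AGP maxima, and `bus_clocks` is a hypothetical helper.

```python
PCI_MAX_MHZ = 100 / 3  # nominal PCI maximum, 33.3 MHz
AGP_MAX_MHZ = 200 / 3  # nominal AGP maximum, 66.7 MHz


def bus_clocks(fsb_mhz: float, pci_divider: int, agp_multiplier: int = 2) -> tuple:
    """Return (PCI MHz, AGP MHz, within spec?) for a board deriving PCI from the FSB."""
    pci = fsb_mhz / pci_divider
    agp = pci * agp_multiplier
    # Small tolerance so the nominal 33.3/66.7 MHz clocks count as in spec.
    in_spec = pci <= PCI_MAX_MHZ + 1e-9 and agp <= AGP_MAX_MHZ + 1e-9
    return pci, agp, in_spec


if __name__ == "__main__":
    print(bus_clocks(75, 2))       # (37.5, 75.0, False): both buses overclocked
    print(bus_clocks(75, 3))       # (25.0, 50.0, True): in spec, but slow
    print(bus_clocks(200 / 3, 2))  # the usual 66 MHz FSB: about 33.3 / 66.7 MHz, in spec
```

A 75 MHz FSB leaves no integer divider that lands PCI at 33 MHz, so the board has to choose between an overclocked bus and a slow one, which matches the point made above.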


Diamond Viper V550 16 MB - 1998

Diamond's "Viper" series were their high-end offerings, typically aimed at gamers. This one featured Nvidia's Riva TNT two-pipeline graphics processor, whose reception was very mixed. When it worked, usually on an Intel 440BX (with an overclocked Celeron, natch), it worked very well. When it didn't work, it was awful, and the list of what didn't work was quite long. Two full pixel pipelines took a lot of silicon, and there wasn't much left for complexity in the bus interface to work around edge cases. TNTs were never happy with the ALI Aladdin V chipset (Socket 7), and neither was the TNT2. Initially, the TNT did not at all like the Unreal engine, which powered quite a few games. Nvidia were quick and furious with driver support, patching around ALI's lame AGP as best they could and quickly bringing a full OpenGL ICD to the table. Throughout Detonator driver refreshes, features and performance were added near constantly. The TNT needed a fast CPU and a bit of patience, but it was faster than anything short of a pair of Voodoo2s in SLI and could go to much higher resolutions: 1920x1200 at 85 Hz, anyone? Core: 2 pipelines, 1 TMU per pipeline, 90 MHz (180 million texels per second) RAM: 128 bit SDRAM, 100MHz, 1600 MB/s Shader: None Thanks to Ars AV forum member SubPar


Number Nine SR9 (S3 Savage4 LT) 8MB - 1999 S3, after the disastrous ViRGE, tried to reclaim their lost position as the world's best video chipset maker with the Savage4. Before the Savage4, they'd released the Savage3D, a complete abandonment of the ViRGE/Trio3D. The Savage3D was good, but in a world dominated by 3DFX, not good enough, and too difficult to produce. Only Hercules bothered with a Savage3D part, and they soon regretted it. The Savage4 addressed these concerns and added multitexturing to the chipset's repertoire, but the Savage4 was a single pipeline, single TMU design. It behaved like a 1x2 (e.g. Voodoo3) part because it could precombine textures, that is, sample and filter two textures at the same time using one TMU, but it could not do so in one cycle like a true 1x2 card could. Savage4 thus did not suffer a fillrate penalty when dual-texturing, but ONLY when dual-texturing. Three texture layers couldn't be optimised like this: the first two were, but the third took another pass. It was innovative and saved silicon area, but it was inflexible, since it couldn't be used with alpha blending, texture modulation or anything other than plain old texture mapping. Savage4 only had 64 bit memory, half the width of offerings from ATI, Nvidia and 3dfx. In all, it wasn't too bad a card (and had hardware texture compression, later licensed by Microsoft for DXTC) and sold quite a few units. The Savage4 LT, as seen here, removed the precombiner and reduced the clock to only 110MHz. It would never be a performer, but it required very little cooling (the same as most northbridges!) and was instead a competitor to ATI's Rage Pro LT in the laptop market, which, in terms of performance, it wiped the floor with. Savage4 also introduced S3TC (now part of DXTC), texture compression which allowed very high resolution textures to be used on the Savage4 with little to no performance impact.
This, as well as single pass trilinear filtering, gave the Savage4 exceptional output quality. Since both Quake III and Unreal Tournament shipped with out-of-the-box support for S3TC, the Savage4 was very well received by gamers wanting more visual quality. Core: 1 pipeline, 1 TMU, 110MHz (110 million texels per second) RAM: 64 bit SDRAM, 110MHz, 880MB/s Shader: None
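S3TC's fixed-rate scheme is simple enough to decode in a few lines, which is part of why it cost so little performance. This is a minimal sketch of decoding one block of DXT1 (the S3TC format that became DXTC's BC1), assuming the commonly documented layout: two little-endian RGB565 endpoint colours followed by sixteen 2-bit indices, one byte per row with the leftmost texel in the lowest bits. Each 4x4 block takes 8 bytes, i.e. 4 bits per texel, a quarter of the size of a 16 bit texture. The exact rounding of interpolated colours varies between decoders, and the function names are hypothetical.

```python
import struct
from typing import List, Tuple

RGBA = Tuple[int, int, int, int]


def rgb565_to_rgba(c: int) -> RGBA:
    """Expand a 16-bit RGB565 colour to 8 bits per channel (bit replication)."""
    r, g, b = (c >> 11) & 0x1F, (c >> 5) & 0x3F, c & 0x1F
    return ((r << 3) | (r >> 2), (g << 2) | (g >> 4), (b << 3) | (b >> 2), 255)


def decode_dxt1_block(block: bytes) -> List[List[RGBA]]:
    """Decode one 8-byte DXT1 block into a 4x4 grid (rows of texels) of RGBA tuples."""
    c0, c1, indices = struct.unpack("<HHI", block)
    p0, p1 = rgb565_to_rgba(c0), rgb565_to_rgba(c1)
    if c0 > c1:
        # Four-colour mode: two extra colours at 1/3 and 2/3 between the endpoints.
        p2 = tuple((2 * a + b) // 3 for a, b in zip(p0, p1))
        p3 = tuple((a + 2 * b) // 3 for a, b in zip(p0, p1))
    else:
        # Three-colour mode: the midpoint plus transparent black.
        p2 = tuple((a + b) // 2 for a, b in zip(p0, p1))
        p3 = (0, 0, 0, 0)
    palette = [p0, p1, p2, p3]
    # Texel (x, y) uses the 2-bit index at bit position 2 * (4 * y + x).
    return [[palette[(indices >> (2 * (4 * y + x))) & 0b11] for x in range(4)]
            for y in range(4)]


if __name__ == "__main__":
    red, blue = 0xF800, 0x001F
    block = struct.pack("<HHI", red, blue, 0x55555555)  # every index is 1
    print(len(block), decode_dxt1_block(block)[0][0])
```

Because every block is the same size, the hardware can compute where any texel lives without decompressing the rest of the texture, which is what lets it sample compressed textures directly.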


3dfx Voodoo3 3000 16MB - 1998 The Voodoo3 was a competitive solution, but too little and too late. 3dfx had neglected development of Rampage, causing numerous delays in their product cycle. As a result, Voodoo3 was their swansong. As a 3D accelerator it was fairly standard fare for the day: a 1x2 design (probably a mistake, as 2x1 was much more powerful in reality) with DirectX 6 compliance, single cycle trilinear filtering, w and z based fogging, perspective correct texturing, a fillrate of 166,000,000 pixels/second and 333,000,000 texels/second when multitexturing. Overclocked Voodoo3s were the first cards to pass 400 million pixels per second fillrate, giving them a theoretical maximum framerate of 508fps at 1024x768. Voodoo3 was the "Avenger" project, a revised version of "Banshee" with an extra texture mapper to tide the company over until Rampage arrived. However, problems with Banshee and Avenger meant manpower was taken from the Rampage project and it was delayed numerous times. Then, work on VSA-100 delayed Rampage so much that the company never recovered. Avenger itself was the most advanced of the pre-T&L video cards, featuring advanced vertex processing such as guardband culling and hardware clipping, plus the powerful 2D accelerator from Banshee, which was one of the highest performing 2D GDI accelerators available and retained this title until Windows Vista introduced desktop composition. Only "Napalm" (VSA-100) was more advanced on the pre-T&L front, but it was released long after GeForce was. While drivers were stable, they did not expose the full power of Avenger. The guardband culler was only active if forced on or in a select few Glide3 games, and the line antialiasing was never enabled. Very tellingly, the last 1.0.8 drivers which leaked out were upwards of 40% faster than launch drivers in some applications. There was still more performance to be had, but the company had a lot of trouble exposing it.
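The 508fps figure is simply fill rate divided by the pixels in one frame. This is a minimal sketch, assuming every pixel is drawn exactly once per frame; the optional `overdraw` factor is an added assumption for scenes where pixels are drawn several times, and `max_fps` is a hypothetical helper.

```python
def max_fps(fill_rate_per_s: float, width: int, height: int, overdraw: float = 1.0) -> float:
    """Upper bound on frames per second when fill rate is the only limit."""
    return fill_rate_per_s / (width * height * overdraw)


if __name__ == "__main__":
    print(int(max_fps(400e6, 1024, 768)))                # 508, the figure quoted above
    print(round(max_fps(400e6, 1024, 768, overdraw=3)))  # 170 with an assumed 3x overdraw
```

Real games never approach this bound, since geometry, CPU time and overdraw all eat into it.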
On paper, Avenger's texel and triangle throughput was superior to everything around it, and it was the first gaming video card to feature properly working OpenGL support (TNT2 rapidly caught up here). After the demise of 3dfx, enthusiasts continued to tweak and refine the drivers until an actual working transformation offload engine was implemented. It wasn't DX7-class transformation and lighting, but the transformation part was there, albeit done partially on the CPU. 3dfx billed Voodoo3 as "the integration of 7 chips into one", which is a bit amusing, as it counted the six chips on Voodoo2 SLI and the one on Banshee. It was, however, just a very fast single Voodoo2, so an integration of just two chips: the Voodoo Banshee and a Voodoo2 TMU. Controversially, Voodoo3 featured a pre-RAMDAC filter. The 32 bit rendering was dithered to 16 bit in the framebuffer, then the output was filtered to "22 bit" in the RAMDAC (the filter was either 1x4 or 2x2), and this filtering could not be captured in screenshots. The filter removed most of the ordered dither artefacts and recovered quite a bit of image quality. Since Voodoo3 only supported 16 bit textures, screenshots made it appear that Voodoo3 was outputting a plain 16 bit display, which it wasn't. The Avenger core operated to 8 bit per channel precision (24/32 bit) when performing pixel operations (but 16 bits when doing texture operations). It therefore did not suffer the banding artefacts that strictly 16 bit cores (e.g. the Banshee) did. Because of how the framebuffer was stored, however, screenshots showed some dither artefacts (though fewer than true 16 bit rendering) that were not visible on screen. Like all Voodoos, this card had its core and RAM clocks locked together, but it managed to hit 205MHz on both from a standard 166MHz. Voodoo3 did not remove some of the important limitations which its ancestors had.
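This sketch shows how a 2x2 filter can claw back precision lost to ordered dithering, using one 8-bit channel of a flat-coloured area. The 2x2 Bayer matrix, the bias values and the plain box average are assumptions chosen for illustration. They are not 3dfx's actual dither pattern or filter kernel, and the function names are hypothetical.

```python
# Hypothetical 2x2 ordered-dither matrix (not 3dfx's actual pattern).
BAYER_2X2 = [[0, 2],
             [3, 1]]

def dither_to_5_bits(value, x, y):
    """Quantise an 8-bit channel to 5 bits after adding a position-dependent bias."""
    bias = BAYER_2X2[y % 2][x % 2] * 2 + 1   # biases 1, 3, 5, 7 within one 8-step
    return min(31, (value + bias) >> 3)       # clamp so 255 stays a valid 5-bit value

def box_filter_2x2(value):
    """Average the four dithered samples of a flat area, rescaled to 8-bit units."""
    samples = [dither_to_5_bits(value, x, y) for y in range(2) for x in range(2)]
    return sum(samples) / 4 * 8

for v in (100, 101, 102, 103):
    # original value, plain truncation to 5 bits, dithered-then-filtered result
    print(v, (v >> 3) * 8, box_filter_2x2(v))
# 100 96 100.0
# 101 96 102.0
# 102 96 102.0
# 103 96 104.0
```

Plain truncation collapses all four inputs to 96. Averaging the dithered 2x2 block separates them in steps of 2, which amounts to roughly two extra bits per channel. Applied to RGB 565, that would give 7+8+7 = 22 bits. This is one plausible reading of the "22 bit" label, not a derivation documented by 3dfx.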
Voodoo3 could only use textures of 256x256 or smaller, initially had difficulty rendering in windowed mode, and was limited to 800x600 in some games because they relied on earlier versions of Glide. Drivers: Windows 2000, Windows XP (enhanced customised driver). Included with Windows 2000, Windows XP, most Linuxes. Core: 1 pipeline, 2 TMU, 166MHz (333 million texels per second) RAM: 128 bit SDRAM, 166MHz, 2666MB/s Shader: None |
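The RAM bandwidth in these spec lines is bus width in bytes multiplied by memory clock. Below is a minimal sketch for single-data-rate SDRAM with one transfer per clock, using a hypothetical helper name. It assumes MB means 10^6 bytes, which is what the quoted figures imply. It also assumes the Voodoo3 3000's "166MHz" is really 166.67MHz, since that is what the 333 million and 2666MB/s figures suggest.

```python
def bandwidth_mb_s(bus_bits, mem_mhz):
    """Theoretical peak bandwidth in MB/s (10**6 bytes) for single-data-rate SDRAM.

    One transfer per memory clock, each bus_bits wide. Refresh, page misses
    and read/write turnaround are ignored, so real throughput is lower.
    """
    return bus_bits // 8 * mem_mhz

print(bandwidth_mb_s(128, 500 / 3))  # Voodoo3 3000 at 166.67MHz, quoted as 2666MB/s
print(bandwidth_mb_s(64, 150))       # TNT2 M64: 1200
print(bandwidth_mb_s(128, 143))      # Rage 128 Pro: 2288
print(bandwidth_mb_s(64, 110))       # Savage4: 880
```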

|||||||||||||||||||||

|

3dfx Voodoo3 3000 PCI - 1998 The PCI version of the Voodoo3 performed almost identically to the AGP version, but was slightly more expensive due to higher demand; a top-notch video card on the PCI bus wasn't common back then. Voodoos did not support AGP-specific features and just used AGP as a fast PCI slot, as everything AGP-specific either hurt performance or didn't help much. What the extra RAM positions were for is unknown, as all Voodoo3s had 16MB except the Velocity derivatives with 8MB. Lower-density SDRAM is probably the answer: there are four unused SDRAM positions, and all AGP Voodoos used eight 16-bit-wide chips. Using 32-bit-wide DRAMs helped save on component cost. Core: 1 pipeline, 2 TMU, 166MHz (333 million texels per second) RAM: 128 bit SDRAM, 166MHz, 2666MB/s Shader: None |
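The chip-count reasoning above is simple arithmetic: chips multiplied by chip width must reach 128 bits, and chip density multiplied by chip count must reach 16MB. This sketch works out both layouts. The helper names are hypothetical, and the densities it prints are derived numbers, not an identification of the parts fitted to this card.

```python
def bus_width(chips, bits_per_chip):
    """Total memory bus width when every chip sits side by side on the bus."""
    return chips * bits_per_chip

def mbits_per_chip(total_mb, chips):
    """Density each chip needs (in Mbit) for a given total, with MB = 2**20 bytes."""
    return total_mb * 8 // chips

print(bus_width(8, 16), mbits_per_chip(16, 8))  # AGP layout: 128 16
print(bus_width(4, 32), mbits_per_chip(16, 4))  # four 32-bit chips: 128 32
```

Both layouts give the same 128 bit bus. The four-chip version simply needs parts of twice the density and half the count.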

|||||||||||||||||||||

|

ATI Rage 128 Pro 16MB - 1999 Usually sold as the Rage Fury Pro or Xpert 2000 Pro. Unlike the Xpert 2000 (based on the original Rage 128GL), its memory interface was not reduced to 64 bit. While the card doesn't look like the Xpert 2000 Pro, it has identical specs, and the card itself carries no label as to what it might be sold as. I suspect we're looking at one of the OEM Rage 128 Pro 16MB parts. It wasn't a great card by the time of its release in late 1999. The 16MB model could just about keep up with a Voodoo3 3000, but the Voodoo3 3500 was too much for it. That's not bad considering that the Voodoo3 3000 ran at 166MHz and the Rage 128 Pro only 125MHz...but it also shows that 3dfx were getting better yields than ATI were on their chips. ATI's major problem, one which would haunt them until R300, was their lacklustre driver support: their parts would run far below their theoretical maxima simply because the drivers were no good. The Pro variant tidied up triangle setup to handle 8 million triangles a second (double what the Rage 128 could handle, but only equal to the Voodoo3 and slightly less than the TNT2 Ultra). It also fixed some bugs in bilinear texture filtering, added S3TC support, added a TMDS transmitter for DVI support (you can see where a DVI connector could have been fitted to this card) and improved AGP support to allow use in AGP 4x motherboards. Core: 2 pipeline, 1 TMU, 125MHz (250 million texels per second) RAM: 128 bit SDRAM, 143MHz, 2288MB/s Shader: None |
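The clock comparison above, and the 1x2 versus 2x1 debate in the Voodoo3 entry, both come down to how many clocks each pixel costs. Below is a deliberately simplified Python model built around a hypothetical `pixel_rate` helper. Each pipeline applies up to its TMU count in textures per clock and loops back for any remainder. Bandwidth, caches, triangle setup and any pipeline-combining tricks are ignored.

```python
import math

def pixel_rate(core_mhz, pipelines, tmus_per_pipeline, textures):
    """Peak Mpixels/s when every pixel needs `textures` texture lookups.

    Simplified model: a pipeline applies up to tmus_per_pipeline textures per
    clock and loops back for extra passes. Memory bandwidth, caches and
    triangle setup are ignored, and pipelines are never combined.
    """
    clocks_per_pixel = math.ceil(textures / tmus_per_pipeline)
    return pipelines * core_mhz / clocks_per_pixel

for textures in (1, 2):
    rage = pixel_rate(125, 2, 1, textures)    # Rage 128 Pro: 2x1
    voodoo = pixel_rate(166, 1, 2, textures)  # Voodoo3 3000: 1x2
    print(textures, rage, voodoo)
# 1 250.0 166.0
# 2 125.0 166.0
```

In this model, the Rage 128 Pro's two pipelines win comfortably with one texture per pixel. Once two textures are applied, the Voodoo3's second TMU keeps it ahead. Clock speed alone therefore doesn't settle the comparison.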

|||||||||||||||||||||

|

Matrox Millennium G400 16 MB (G4+M4A16DG) - 1999 The G200, the successor to the […] History was about to repeat itself, or so many observed at the time. The G400 was loaded with advanced technology, such as two unidirectional 128 bit internal buses (one in and one out; the G200 had the same, but as a pair of 64 bit buses). It also had the same 2x1 architecture as the powerful TNT2, giving it a peak fillrate matching its rivals. The G400 was locked to RGBA 8888 internally, unable to drop precision to 16 bit (RGB 565 or RGBA 4444). Matrox billed this as improving the G400's display quality, when in fact it simply reduced performance in games which used 16 bit textures on a 32 bit display, which was very common in the days before DXTC. The G400's triangle setup engine was billed as the industry's first programmable setup engine, but it appears that Matrox did a poor job of programming it (it couldn't be programmed by anything other than the display driver). Both the TNT2 and the Voodoo3 had far higher triangle throughput, and the G400 was observed to be extremely reliant on the host CPU. While ATI fixed this problem with a refresh of the Rage 128, Matrox never did. It did some entirely forgotten Bitboys stuff too. Likely a hoax. Did history repeat itself? It sure did! The G400 lacked a passable OpenGL ICD until the middle of 2000, almost a year after release, an almost exact repeat of the very same mistakes which killed the G200! Much noise was made about the G400's somewhat primitive DVD acceleration but, to be perfectly fair, even those who had ATI Rage 128 cards (which could offload almost the entire DVD decoding pathway) would usually be using a software decoder. The G400 was eventually a passable DirectX 6.0 accelerator, comparable to the TNT2 Ultra and Voodoo3 3500, and it was also the first performance card to support dual displays.
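Here is the 16 bit texture penalty in concrete terms. A core with no 16 bit path has to handle each RGB 565 texel as a 32 bit RGBA 8888 value. This minimal sketch shows that widening, plus the storage cost of a 256x256 texture at each depth. The RGBA packing order, the bit-replication rule and the helper names are assumptions. The text doesn't say whether the G400 widened textures on upload or on the fly.

```python
def rgb565_to_rgba8888(c):
    """Widen a 16-bit RGB565 texel to a 32-bit RGBA8888 word with opaque alpha."""
    r5, g6, b5 = (c >> 11) & 0x1F, (c >> 5) & 0x3F, c & 0x1F
    r8 = (r5 << 3) | (r5 >> 2)   # replicate the top bits so 31 maps to 255
    g8 = (g6 << 2) | (g6 >> 4)
    b8 = (b5 << 3) | (b5 >> 2)
    return (r8 << 24) | (g8 << 16) | (b8 << 8) | 0xFF

def texture_bytes(width, height, bytes_per_texel):
    """Storage for one mip level of a texture."""
    return width * height * bytes_per_texel

print(hex(rgb565_to_rgba8888(0xF800)))                        # 0xff0000ff
print(texture_bytes(256, 256, 2), texture_bytes(256, 256, 4))  # 131072 262144
```

If the texel is moved at 32 bits, twice the bytes per texel means twice the memory traffic for the same texture fetch.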
Some ATI models had dual display support, such as the Rage LT, but it was usually a curiosity or a way for a laptop to use an external monitor in extended desktop mode; it wasn't a feature on performance cards. To provide this "DualHead" feature, the G400 had to use an external RAMDAC, something which hadn't been seen since the dark ages. DualHead was a good feature, but not everything Matrox made it out to be. The second RAMDAC was clocked much lower than the first, so the secondary display had to run at a lower resolution and refresh rate, somewhat limiting its ability to drive high-resolution displays. GDI performance, such as dragging windows, was noticeably slower with DualHead turned on, but it didn't really matter. Matrox had found a new market segment in productivity users, who benefited greatly from the ability to run two monitors without two video cards. Matrox's excellent PowerDesk driver control panel didn't hurt either. The G400 initially sold rather modestly but, as DualHead took off and word spread, sales picked up to a rather brisk pace. This card doesn't have the second display output, and gamers had their pants in a bunch over the Voodoo3 and TNT2 (later the GeForce). It was therefore likely bought for Matrox's other strength: its VGA output quality. Only the Voodoo3 could match the output filtering quality of the G200 and G400. VGA is an analog signal, so it requires tightly specified RF filtering components (usually an L-C network) to avoid "muddiness" and horizontal smearing of things like text. This particular card was provided by silver7, although I had one myself back in the day (about 2003). It was passable, but disappointing. Depending on the task, it delivered between 40% and 60% of the performance of a Voodoo3 3000 clocked at 205MHz. It did well in 3DMark 99 and 3DMark 2001, but handled poorly in Serious Sam, Neverwinter Nights and ZD WinBench. WinBench is a GUI-oriented productivity test, one in which you would expect Matrox to do well.
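The secondary RAMDAC's lower clock caps resolution and refresh rate because the dot clock it must sustain scales with the full raster, blanking included. Here is a minimal sketch using a hypothetical helper. The 1376x808 totals for 1024x768 at 85Hz are taken to be the VESA DMT figures; that is an assumption here, not something measured on this card.

```python
def pixel_clock_mhz(h_total, v_total, refresh_hz):
    """Dot clock a RAMDAC must sustain: every pixel of the full raster per frame."""
    return h_total * v_total * refresh_hz / 1e6

# Assumed VESA DMT totals for 1024x768 at 85Hz (active area plus blanking).
with_blanking = pixel_clock_mhz(1376, 808, 85)
active_only = pixel_clock_mhz(1024, 768, 85)
print(with_blanking, active_only)
print(round(with_blanking / active_only, 2))
```

In this case, the blanking intervals alone add over 40% to the required clock. A RAMDAC rated well below the primary one therefore runs out of headroom quickly as resolution or refresh rate rises.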
Further investigation found that Matrox wasn't particularly bad in Ziff Davis' WinBench; 3dfx was particularly good. Notably, a 92MHz Voodoo Banshee could beat a 200MHz GeForce 2. Core: 2 pipeline, 1 TMU per pipeline, 125MHz (250 million texels per second) RAM: 128 bit SDRAM, 166MHz, 2666MB/s Shader: None Provided by Ars AV forum member sliver7 |

|||||||||||||||||||||

|

Innovision TNT2 M64 16MB - 1999 The TNT2, when new, was a decent enough 3D accelerator. However, when it was new, the calendar read 1998. This Innovision card has a build date on the back of week 31 2002, over four years after the TNT2's first introduction and contemporary with the Radeon 9700! The TNT2 M64 (and the Vanta) had its memory bus cut in half from 128 bit to 64 bit, halving the bandwidth, to lower component count and PCB size. It was commonly used as a bare-bones monitor controller by the likes of Dell. Its 3D performance left a lot to be desired, but the early P4 machines (which also left a lot to be desired) had chipsets with no integrated display, so a bottom-of-the-barrel solution was required. Architecturally the TNT2 is a 2x1 design and, at 150MHz, is capable of 300 million texels per second regardless of how many textures are applied per pixel. The clocks ranged from 80MHz to 150MHz. The 150MHz part was the ferociously expensive TNT2 Ultra, which could even best a Voodoo3 3500 in some games. The 80MHz part was the abysmal TNT2 Vanta LT, which was so incredibly slow that a Voodoo Banshee and the original Riva TNT could beat it easily. Drivers: Vendor Driver Download. Included with most Linuxes, Windows XP, Windows 2000. Warning: Windows XP installs a 5x.xx derived driver, BUT offers a much older driver (a 3x.xx version) on Windows Update. Do not install the one from Windows Update; it causes severe problems, up to and including total failure of the GDI engine. This goes for all TNT2 cards. Core: 2 pipeline, 1 TMU, 125MHz (250 million texels per second) RAM: 64 bit SDRAM, 150MHz, 1200MB/s Shader: None |
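Here is one way to see why halving the bus hurts so much: compute how many bytes of peak memory bandwidth are available for each pixel the core can draw. This minimal sketch uses a hypothetical helper. It assumes the 125MHz core and 150MHz memory from the spec line and two pixels per clock, and it ignores any caching.

```python
def bytes_per_pixel_budget(bus_bits, mem_mhz, core_mhz, pixels_per_clock):
    """Peak memory bytes available per pixel drawn, with the core running flat out."""
    bytes_per_core_clock = bus_bits / 8 * mem_mhz / core_mhz
    return bytes_per_core_clock / pixels_per_clock

full = bytes_per_pixel_budget(128, 150, 125, 2)  # full-width 128 bit TNT2-class board
m64 = bytes_per_pixel_budget(64, 150, 125, 2)    # TNT2 M64 with its bus cut to 64 bits
print(full, m64)                                 # 9.6 4.8
```

Take a hypothetical per-pixel workload of a 32 bit colour write plus a 32 bit Z read and write. That is 12 bytes before any texture fetch, which is 2.5 times the M64's budget but only 1.25 times that of the full-width board.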

|||||||||||||||||||||

|

MSI 3D AGPhantom TNT2 M64 32MB (MS8808) - 1999 As stated above, the TNT2 M64 was very weak even when new and, of course, the amount of video memory is not nearly so important as how fast it is. Video cards have their own memory because they need it to be local and very fast, but the M64 defeats all of that by using commodity SDRAM chips, just like PC133 DIMMs did. This cut down on cost a great deal but really killed performance. This one had a very low-profile heatsink with a very small fan. The fan sat so close to the heatsink base that it blew air back up into its own bearing, which became clogged with dust, so the fan ground and vibrated. This happens to small fans in any low-clearance location, be it on a motherboard, a video card or anywhere else. That's why this card was replaced. It wasn't ever going to play any games or even run Google Earth; it just sat there doing nothing. This card is contemporary with the older TNT2 below and was one of the first M64s (which were laughed out of town even then!), far older than the one above. The fact that there are two M64s in this collection alone tells a lot about how popular they were among integrators, builders and OEMs. They gave cheap, slow video to systems which would otherwise have had no video. The GeForce2 MX and GeForce4 MX would later replace it, even though the M64 remained in production until after the GeForce2 MX was discontinued! A financial success story, but that was its only success. Core: 2 pipeline, 1 TMU, 125MHz (250 million texels per second) RAM: 64 bit SDRAM, 150MHz, 1200MB/s Shader: None |

|||||||||||||||||||||

|

Anonymous TNT2 - 1999 Well then, what the hell is this? It has no identifying marks on the PCB at all, except a sticker on the back cunningly labelled "LRI 2811". It has no UL number, and its only other distinctive feature is the Brooktree/Conexant BT689 TV encoder, not at all common on TNT2 cards. Is it a Pro, a Vanta, an Ultra, an M64? We just can't tell. It's unlikely to be an Ultra, as they would be branded. Time to put the detective hat on. LRI is Leadtek's old numbering system, so we know we have a Leadtek card; this is sensible, as they were a major Nvidia partner even back then. How wide is the memory bus? As we can see, we're using Samsung K4S643232C-TC70 memory. That memory is organised as 512K x 32 bit x 4 banks. Each chip is 32 bits wide (2M x 32), so four chips give us a total bus width of 32 bit x 4 chips = 128 bits. It is not a Vanta or an M64. From the TC70 suffix, we know the RAM has a 7ns cycle time. Simply inverting this (Hz is s^-1) tells us it's 142.9MHz, or 143MHz. Which TNT2 model had 143MHz RAM? That's easy enough to find out and, indeed, we learn this card is the TNT2 Pro. Finally, how much memory? According to its datasheet, the K4S643232C holds 67,108,864 bits, which is easily derivable to 8MB. Four 8MB chips is obviously 32MB. We have a Leadtek 32MB TNT2 Pro with TV out, enough information for us to discover that it's the Leadtek WinFast 3D S320 II TNT2 Pro 32 MB + TV-out. The TNT2 Pro, when new, was a 143MHz TNT2 with 166MHz SDRAM as standard. This is clearly not standard: we don't have 166MHz SDRAM, we have 143MHz. It's perfectly possible that we have 166MHz RAM being run at very relaxed timings (video cards don't much care for RAM latency). It's equally possible that we have 143MHz RAM being run with standard timings. Without plugging it into a system, we have no way of knowing for sure...or do we? It turns out that Packard Bell used these video cards and gives us a nice spec list: 150MHz memory.
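The detective work above can be checked mechanically. This sketch starts from the chip organisation and speed grade quoted in the text and reproduces the per-chip size, the board total, the bus width, the rated clock and the bandwidth at Packard Bell's 150MHz. The constant names are hypothetical, and MB is taken as 2^20 bytes for capacity.

```python
# Samsung K4S643232C organisation as quoted in the text: 512K words x 32 bits x 4 banks.
WORDS_PER_BANK = 512 * 1024
BITS_PER_WORD = 32
BANKS = 4
CHIPS = 4

bits_per_chip = WORDS_PER_BANK * BITS_PER_WORD * BANKS
mib_per_chip = bits_per_chip // 8 // 2**20      # bits -> bytes -> MiB
board_mib = mib_per_chip * CHIPS
bus_bits = BITS_PER_WORD * CHIPS                 # every chip sits side by side on the bus
rated_mhz = 1 / 7e-9 / 1e6                       # "-70" speed grade: 7ns cycle time
bandwidth_at_150 = bus_bits // 8 * 150           # MB/s at the Packard Bell 150MHz figure

print(bits_per_chip, mib_per_chip, board_mib, bus_bits, round(rated_mhz, 1), bandwidth_at_150)
# 67108864 8 32 128 142.9 2400
```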
This puts our WinFast S320 between the TNT2 and the TNT2 Pro. The TNT2 had a 125MHz video core, while this has 143MHz. The TNT2 had 150MHz memory, and so does this. The TNT2 Pro had a 143MHz video core like this, but had 166MHz RAM, unlike this. Have a look at Dan's Very Old review of three TNT2s. Our very same card is at the bottom, just printed with what it is on the PCB but otherwise identical; obviously I have the OEM version. It should be noted that Dan reviews the S320 (which had a 125MHz video core), while this is the S320 II with a 143MHz video core. Also, this card is TWO WHOLE YEARS older than the much slower TNT2 M64 just above and has twice the memory. Drivers: Unsupported by vendor. Included with most Linuxes, Windows XP, Windows 2000. Core: 2 pipeline, 1 TMU, 143MHz (286 million texels per second) RAM: 128 bit SDRAM, 150MHz, 2400MB/s Shader: None |

Hattix hardware images are licensed under a Creative Commons Attribution 2.0 UK: England & Wales License except where otherwise stated.
